Developer Portal

Welcome to the KYT Developer Portal! Here you will find resources to help you integrate with the KYT API, including quickstarts, workflows, use-cases, tutorials, and many other resources.

Register a transfer with cURL

Learn how to register a received transfer using the POST /v2/users/{userId}/transfers endpoint.

Before you begin

Ensure you create an API key before you continue to the steps below.

Add your headers

For the POST /v2/users/{userId}/transfers endpoint, use the Token header for your API key and a Content-type: application/json header to indicate you're sending JSON content. The following is an example:

curl -X POST 'https://api.chainalysis.com/api/kyt/v2/users/{userId}/transfers' \

--header 'Token: {YOUR_API_KEY}' \

--header 'Content-type: application/json' \

Create a user id

Use the userId path parameter to create a unique identifier for this user, such as user0001. For more information, see User IDs.

Create your request body

The properties you need to send in your request body depend on the asset's tier. Transfers on mature and emerging networks require at a minimum the following properties:

- network

- asset

- transferReference

- direction

In this example, you register a received transfer of the asset ETH on the Ethereum network. The transferReference is a combination of the transaction hash (0xdd6364536f5f05cc1ea75709b676e2b1b37fad2792d3a71fb537db13100fc6b8) and receiving address, separated by a :. Since your platform received this transfer, the output address is an address you control (0x5cc17d0fa620FE99dAEAa87365C63b453BC47664) and the direction is received.

Using the information above, the following is an example of a properly formatted registration call:

curl -X POST 'https://api.chainalysis.com/api/kyt/v2/users/user0001/transfers' \

-H 'Token: {YOUR-API-KEY}' \

-H 'Content-Type: application/json' \

--data-raw '{

"network": "Ethereum",

"asset": "ETH",

"transferReference": "0xdd6364536f5f05cc1ea75709b676e2b1b37fad2792d3a71fb537db13100fc6b8:0x5cc17d0fa620FE99dAEAa87365C63b453BC47664",

"direction": "received"

}'
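The same registration request can be sketched in Python. This is a minimal illustration using only the standard library, not an official client: the helper name `build_transfer_request` and its parameters are assumptions made for this example, while the URL, headers, and body properties come from the cURL call above. The request is built but not sent.

```python
import json
import urllib.request
from urllib.parse import quote

BASE_URL = "https://api.chainalysis.com/api/kyt"


def build_transfer_request(api_key, user_id, network, asset, tx_hash, output_address, direction):
    """Build (but do not send) a POST /v2/users/{userId}/transfers request.

    Hypothetical helper for illustration; it is not part of any KYT SDK.
    """
    body = {
        "network": network,
        "asset": asset,
        # transferReference is the transaction hash and the output address joined by ":"
        "transferReference": f"{tx_hash}:{output_address}",
        "direction": direction,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/v2/users/{quote(user_id, safe='')}/transfers",
        data=json.dumps(body).encode("utf-8"),
        headers={"Token": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_transfer_request(
    api_key="YOUR_API_KEY",  # placeholder, replace with your own key
    user_id="user0001",
    network="Ethereum",
    asset="ETH",
    tx_hash="0xdd6364536f5f05cc1ea75709b676e2b1b37fad2792d3a71fb537db13100fc6b8",
    output_address="0x5cc17d0fa620FE99dAEAa87365C63b453BC47664",
    direction="received",
)
```

To send the request, pass it to `urllib.request.urlopen(request)` and read the JSON response. Keeping construction separate from sending makes the body easy to inspect before anything reaches the API.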

Assess your response

If the request is properly formatted, you should receive a 202 code with a response similar to the following:

{

"updatedAt": null,

"asset": "ETH",

"network": "ETHEREUM",

"transferReference": "0xdd6364536f5f05cc1ea75709b676e2b1b37fad2792d3a71fb537db13100fc6b8:0x5cc17d0fa620FE99dAEAa87365C63b453BC47664",

"tx": null,

"idx": null,

"usdAmount": null,

"assetAmount": null,

"timestamp": null,

"outputAddress": null,

"externalId": "2774e6d5-aafe-3a26-ad0b-7812c295cb48"

}

Save the externalId for future use. You will need it to identify the transfer when retrieving alerts, exposure, or identifications.

Verify KYT processed your transfer

KYT indicates it has processed your transfer by returning a non-null value for updatedAt. Since it takes a number of seconds to process, updatedAt typically returns null immediately after registering the transfer. To check whether the transfer was processed, call the GET /v2/transfers/{externalId} summary endpoint with your stored externalId until updatedAt returns a timestamp. After KYT processes your transfer, the other properties (assetAmount, timestamp, tx, and others) should also populate.

Below is an example response body from the summary endpoint for the above transfer:

{

"updatedAt": "2022-06-30T16:43:53.172475",

"asset": "ETH",

"network": "ETHEREUM",

"transferReference": "0xdd6364536f5f05cc1ea75709b676e2b1b37fad2792d3a71fb537db13100fc6b8:0x5cc17d0fa620FE99dAEAa87365C63b453BC47664",

"tx": "dd6364536f5f05cc1ea75709b676e2b1b37fad2792d3a71fb537db13100fc6b8",

"idx": 0,

"usdAmount": 2744.02,

"assetAmount": 2.6773581135091926,

"timestamp": "2018-01-18T07:26:28.000+00:00",

"outputAddress": "5cc17d0fa620fe99daeaa87365c63b453bc47664",

"externalId": "2774e6d5-aafe-3a26-ad0b-7812c295cb48"

}
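The polling step described above can be sketched as a small loop. This is an illustrative sketch under stated assumptions: `wait_until_processed`, its parameters, and the default interval and attempt limit are hypothetical choices, and `fetch_summary` stands in for whatever function you write to call GET /v2/transfers/{externalId} and return the parsed JSON.

```python
import time


def wait_until_processed(fetch_summary, external_id, interval_seconds=5.0,
                         max_attempts=60, sleep=time.sleep):
    """Poll a summary endpoint until updatedAt is no longer null.

    fetch_summary is any callable that takes an externalId and returns the
    summary as a dict. Returns the processed summary, or None if
    max_attempts is reached first.
    """
    for attempt in range(max_attempts):
        summary = fetch_summary(external_id)
        if summary.get("updatedAt") is not None:
            return summary
        # Do not sleep after the final attempt.
        if attempt < max_attempts - 1:
            sleep(interval_seconds)
    return None
```

Passing the fetch function and `sleep` as arguments keeps the loop independent of any HTTP library and lets you exercise it with canned responses. The same loop applies to the withdrawal-attempt summary endpoint, since it signals completion the same way.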

What's next

To learn more, check out the following resources:

Register a withdrawal attempt with cURL

Learn how to register a withdrawal attempt using the POST /v2/users/{userId}/withdrawal-attempts endpoint.

Before you begin

Ensure you create an API key before you continue to the steps below.

Format your headers

For the POST /v2/users/{userId}/withdrawal-attempts endpoint, use the Token header for your API key and a Content-type: application/json header to indicate you're sending JSON content. The following is an example:

curl -X POST 'https://api.chainalysis.com/api/kyt/v2/users/{userId}/withdrawal-attempts' \

--header 'Token: {YOUR_API_KEY}' \

--header 'Content-type: application/json' \

Create a user id

Use the userId path parameter to create a unique identifier for this user, such as user0002. For more information, see User IDs.

Specify the address and hash format

Use the formatType query parameter and specify either to_display or normalized to determine the format in which crypto addresses and transaction hashes are returned in the response JSON. For example, append formatType=normalized to specify that all addresses and hashes return in a normalized format:

https://api.chainalysis.com/api/kyt/v2/users/{userId}/withdrawal-attempts?formatType=normalized

To learn more about the differences between these formats, see Address and hash formats.

Create your request body

In your request body, supply at least the following request body properties:

- network

- asset

- address

- attemptIdentifier

- assetAmount

- attemptTimestamp

In this example, you register a withdrawal attempt for five BTC on the Bitcoin network. The attempt is made to the address 1EM4e8eu2S2RQrbS8C6aYnunWpkAwQ8GtG. Identify the attempt with a unique attemptIdentifier, such as attempt1.

Using the information above, the following is an example of a properly formatted registration request:

curl -X POST 'https://api.chainalysis.com/api/kyt/v2/users/user0002/withdrawal-attempts?formatType=normalized' \

--header 'Token: {YOUR_API_KEY}' \

--header 'Content-type: application/json' \

--data '{

"network": "Bitcoin",

"asset": "BTC",

"address": "1EM4e8eu2S2RQrbS8C6aYnunWpkAwQ8GtG",

"attemptIdentifier": "attempt1",

"assetAmount": 5.0,

"attemptTimestamp": "2020-12-09T17:25:40.008307"

}'
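As a rough Python counterpart to the request above, the sketch below builds the endpoint URL with the optional formatType query parameter and checks a body for the minimum properties before you send it. The helper names `withdrawal_attempt_url` and `missing_properties` are hypothetical; the property names and the two formatType values are taken from this guide.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://api.chainalysis.com/api/kyt"
FORMAT_TYPES = ("to_display", "normalized")
REQUIRED_PROPERTIES = (
    "network", "asset", "address",
    "attemptIdentifier", "assetAmount", "attemptTimestamp",
)


def withdrawal_attempt_url(user_id, format_type=None):
    """Return the withdrawal-attempts URL, optionally with ?formatType=..."""
    url = f"{BASE_URL}/v2/users/{quote(user_id, safe='')}/withdrawal-attempts"
    if format_type is None:
        return url
    if format_type not in FORMAT_TYPES:
        raise ValueError(f"formatType must be one of {FORMAT_TYPES}")
    return url + "?" + urlencode({"formatType": format_type})


def missing_properties(body):
    """List the minimum request body properties that are absent or null."""
    return [name for name in REQUIRED_PROPERTIES if body.get(name) is None]


body = {
    "network": "Bitcoin",
    "asset": "BTC",
    "address": "1EM4e8eu2S2RQrbS8C6aYnunWpkAwQ8GtG",
    "attemptIdentifier": "attempt1",
    "assetAmount": 5.0,
    "attemptTimestamp": "2020-12-09T17:25:40.008307",
}
url = withdrawal_attempt_url("user0002", "normalized")
```

Checking the body locally catches a forgotten property before the API has to reject the request.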

Assess your response

If the request was successful, you should receive a 202 code with a response similar to the following:

{

"asset": "BTC",

"network": "BITCOIN",

"address": "1EM4e8eu2S2RQrbS8C6aYnunWpkAwQ8GtG",

"attemptIdentifier": "attempt1",

"assetAmount": 5.0,

"usdAmount": null,

"updatedAt": null,

"externalId": "e27adb25-7344-3ae3-9c80-6b4879a85826"

}

Save the externalId for future use. You will need it to identify the withdrawal attempt when retrieving alerts, exposure, or identifications.

Verify KYT processed your withdrawal

KYT indicates it has processed your withdrawal by returning a non-null value for updatedAt. Since it takes a number of seconds to process, updatedAt typically returns null immediately after registration. To check whether the withdrawal was processed, call the GET /v2/withdrawal-attempts/{externalId} summary endpoint with your stored externalId until updatedAt returns a timestamp. After KYT processes your withdrawal, usdAmount should also populate.

Below is an example response body from the summary endpoint for the above withdrawal:

{

"asset": "BTC",

"network": "BITCOIN",

"address": "1EM4e8eu2S2RQrbS8C6aYnunWpkAwQ8GtG",

"attemptIdentifier": "attempt1",

"assetAmount": 5.0,

"usdAmount": 5000.00,

"updatedAt": "2022-04-27T20:21:43.803+00:00",

"externalId": "e27adb25-7344-3ae3-9c80-6b4879a85826"

}

Register for continuous monitoring

You can also register the withdrawal attempt as a sent transfer. Doing so configures KYT to continuously monitor the transfer for updates in exposure and identifications, generating alerts according to your risk settings. To register the transfer as sent, follow the steps in Quickstart: Register a transfer, but this time indicate the direction as sent in the request body.

What's next

To learn more, check out the following resources:

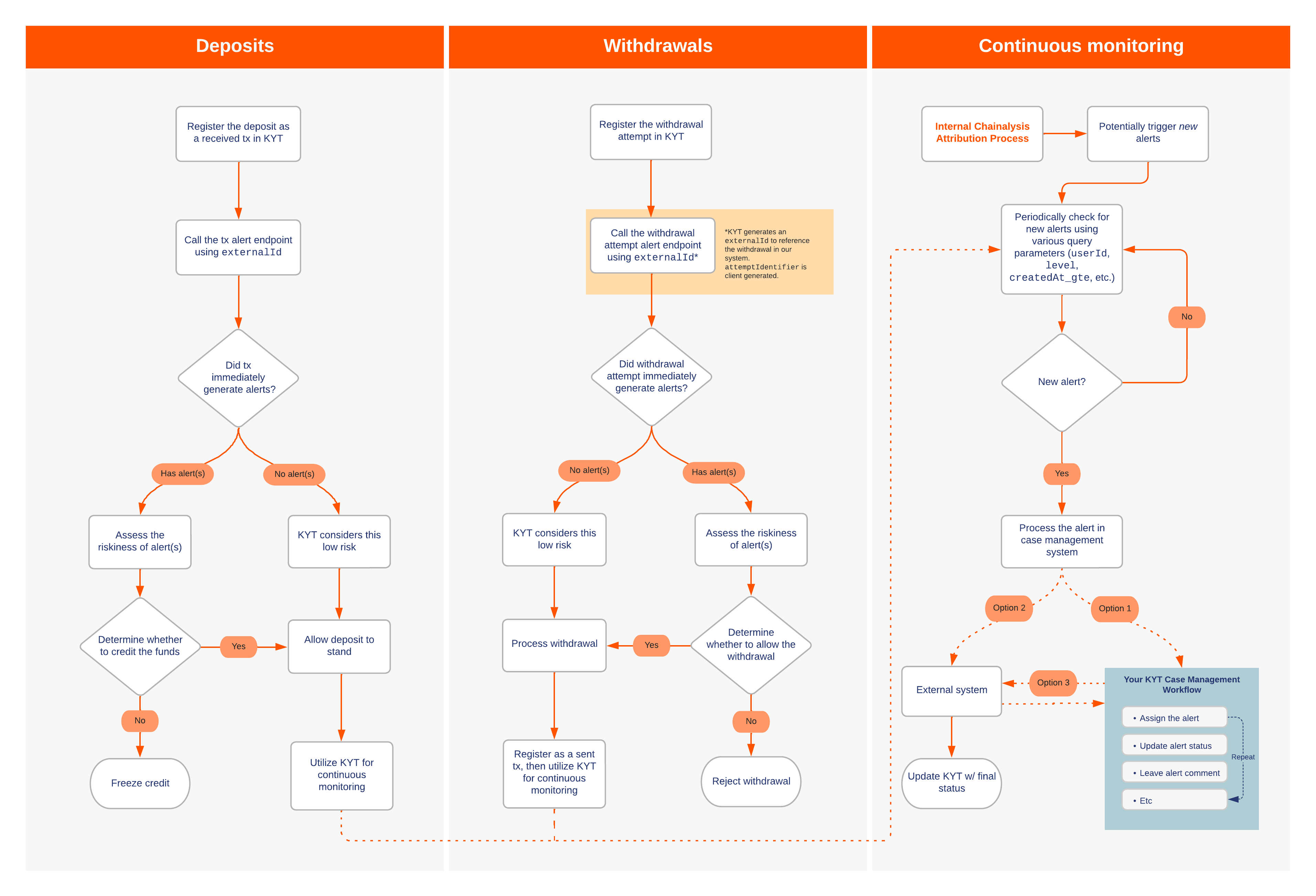

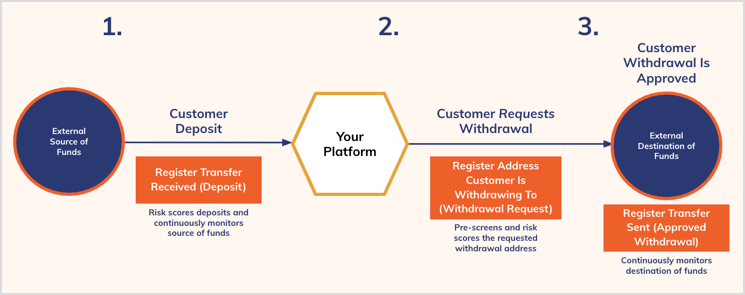

Workflows

The API implementation guide details three common use cases:

- Crediting a user's funds upon deposit

- Processing a user's withdrawal attempt

- Retrieving alerts for transaction monitoring

This diagram shows a general overview of common KYT workflows. For information on which endpoints to use, and in what order, see the procedures below.

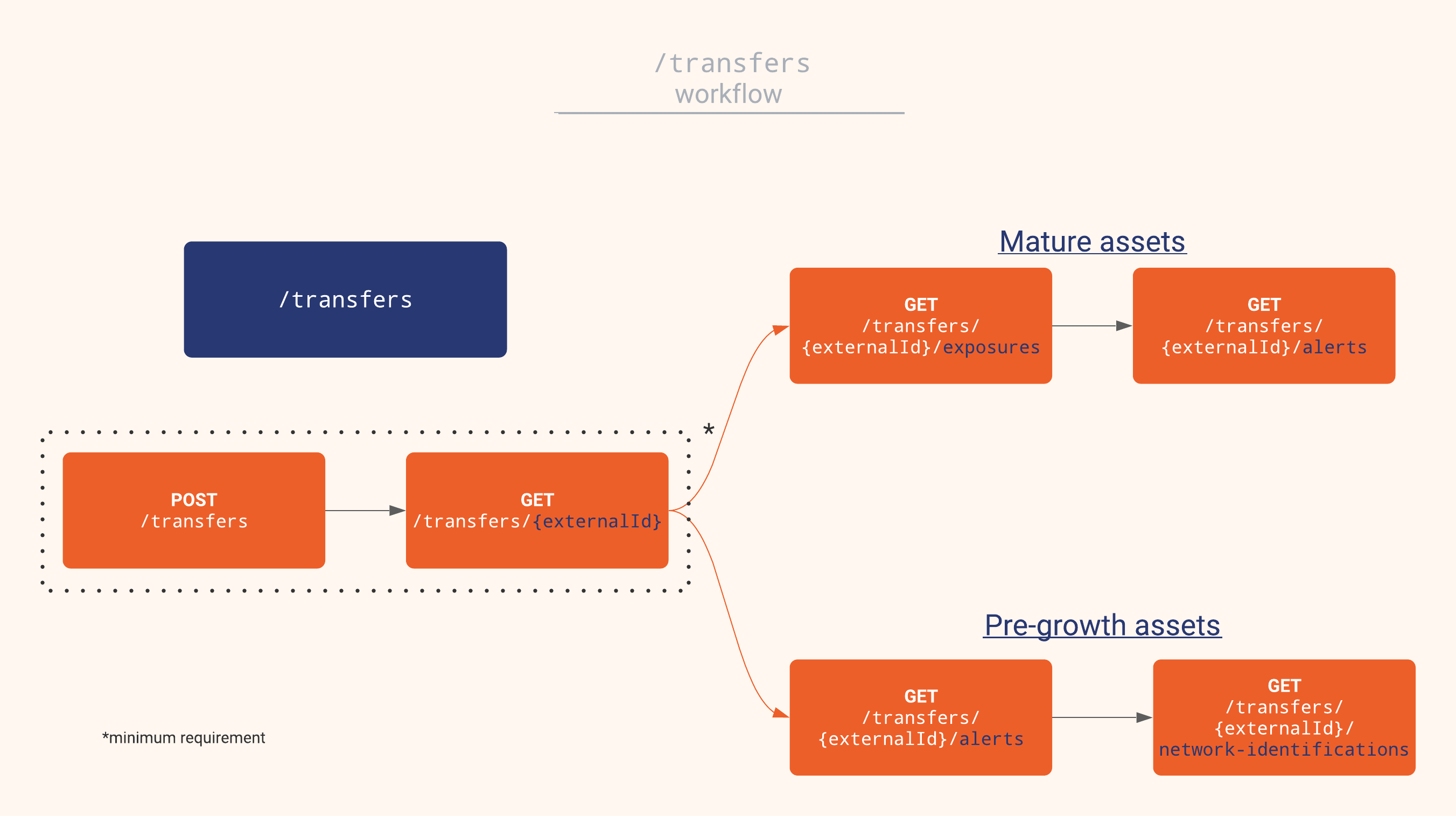

Crediting a user’s funds

Typically, services do not have control over received transfers. This guide details how to use KYT data to take programmatic action when receiving a user deposit. Outlined below is an example describing how to use the KYT API to navigate received deposits.

When you receive a user deposit:

1. Call the POST /v2/users/{userId}/transfers endpoint. Be sure to indicate the "direction" as "RECEIVED" in the request body. If successful, you will:

- Receive a 202 response.

- Receive an external identifier (externalId). Store this external identifier in your system for later use.

2. Call the GET /v2/transfers/{externalId} summary endpoint using the externalId received in step 1. You will need to poll this endpoint until updatedAt is no longer null. Once populated, KYT generates alerts according to your organization’s alert rules.

Note: How quickly the updatedAt field populates depends on how many confirmations Chainalysis requires before processing transactions for a given asset. Some require fewer confirmations or are quicker than others. Learn more about polling the summary endpoints here.

3. Once the updatedAt field populates, determine whether the asset is part of a mature or emerging network or pre-growth network and follow the corresponding procedure below.

User deposits for mature and emerging assets

With mature and emerging assets, you can retrieve the following additional information about the transfer, if available:

- Direct exposure information

- Alerts specific to the transfer

To retrieve additional information about the received transfer:

- Call the GET /v2/transfers/{externalId}/exposures endpoint to retrieve any available direct exposure information.

- Call the GET /v2/transfers/{externalId}/alerts endpoint to retrieve any generated alerts specific to this transfer.

Learn more about retrieving alert data for ongoing transaction monitoring here.

User deposits for pre-growth assets

For pre-growth assets, you can retrieve the following additional information about the transfer, if available:

- Alerts specific to the transfer

- Network Identifications - learn more about Network Identifications here.

To retrieve additional information about the received transfer:

- Call the GET /v2/transfers/{externalId}/alerts endpoint to retrieve any generated alerts specific to this transfer.

- Call the GET /v2/transfers/{externalId}/network-identifications endpoint to retrieve the counterparty name for any asset and transaction hash matches.

Learn more about retrieving alert data for ongoing transaction monitoring here.

Note: Depending on the transfer, direct exposure information may be available. You can check by calling the GET /v2/transfers/{externalId}/exposures endpoint.

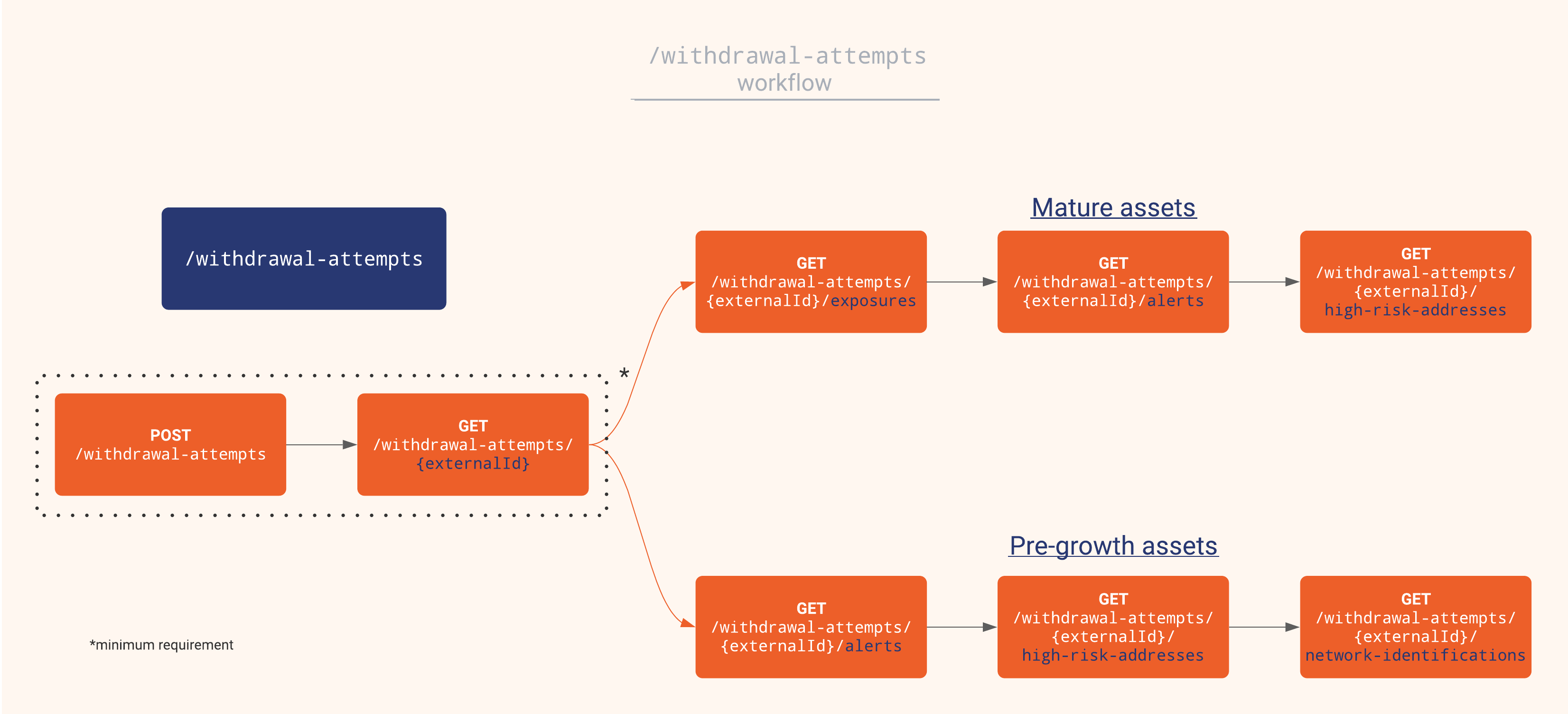

Processing withdrawals

Sometimes services do not have information about the counterparty where their users attempt to make a withdrawal. This guide details how to use KYT data to take programmatic action when users attempt withdrawals. Outlined below is an example describing how to use the KYT API to navigate withdrawal attempts.

When a user attempts a withdrawal:

1. Call the POST /v2/users/{userId}/withdrawal-attempts endpoint. If successful, you will:

- Receive a 202 response.

- Receive an external identifier (externalId). Store the external identifier in your system for later use.

2. Call the GET /v2/withdrawal-attempts/{externalId} summary endpoint, using the externalId received in step 1. You will need to poll this endpoint until updatedAt is no longer null. Once populated, KYT generates alerts according to your organization’s alert rules.

3. Once the updatedAt field populates, determine whether the asset is part of a mature or emerging network or pre-growth network and follow the corresponding procedure below.

Withdrawal attempts for mature and emerging assets

With mature and emerging assets, you can retrieve the following additional information, if available:

- Direct exposure information

- Alerts specific to the withdrawal attempt

- Chainalysis Address Identifications

To retrieve additional information about the withdrawal attempt:

- Call the GET /v2/withdrawal-attempts/{externalId}/exposures endpoint to retrieve any counterparty exposure information.

- Call the GET /v2/withdrawal-attempts/{externalId}/alerts endpoint to retrieve any available alerts specific to this counterparty.

- Call the GET /v2/withdrawal-attempts/{externalId}/high-risk-addresses endpoint to check if the counterparty has any Chainalysis Address Identifications.

- After successfully processing a user’s withdrawal, call the POST /v2/users/{userId}/transfers endpoint and indicate the "direction" as "SENT" to register the transfer for ongoing monitoring.

Withdrawal attempts for pre-growth assets

With pre-growth assets, you can retrieve the following additional information, if available:

- Alerts specific to the withdrawal attempt

- Chainalysis Address Identifications

- Network Identifications - learn more about Network Identifications here.

To retrieve additional information about the withdrawal attempt:

- Call the GET /v2/withdrawal-attempts/{externalId}/alerts endpoint to retrieve any alerts specific to the counterparty.

- Call the GET /v2/withdrawal-attempts/{externalId}/high-risk-addresses endpoint to check if the counterparty has any Chainalysis Address Identifications.

- Call the GET /v2/withdrawal-attempts/{externalId}/network-identifications endpoint to retrieve the counterparty name of any asset and transaction hash matches.

- After successfully processing a user’s withdrawal, call the POST /v2/users/{userId}/transfers endpoint and indicate the "direction" as "SENT" to register the transfer for ongoing monitoring.
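The follow-up calls for deposits and withdrawal attempts differ only by resource and asset tier, so they can be captured in a lookup table. The sketch below is one possible way to organize that choice. The function name `follow_up_paths` and the tier labels "mature-or-emerging" and "pre-growth" are assumptions made for this example; the endpoint paths are the ones listed in the procedures above. How you determine an asset's tier is outside the scope of this sketch.

```python
# Follow-up endpoint suffixes, keyed by (resource, tier).
# The tier labels are hypothetical names chosen for this example.
FOLLOW_UPS = {
    ("transfers", "mature-or-emerging"): ["exposures", "alerts"],
    ("transfers", "pre-growth"): ["alerts", "network-identifications"],
    ("withdrawal-attempts", "mature-or-emerging"): [
        "exposures", "alerts", "high-risk-addresses",
    ],
    ("withdrawal-attempts", "pre-growth"): [
        "alerts", "high-risk-addresses", "network-identifications",
    ],
}


def follow_up_paths(resource, tier, external_id):
    """Return the GET paths to call once updatedAt has populated."""
    try:
        suffixes = FOLLOW_UPS[(resource, tier)]
    except KeyError:
        raise ValueError(f"unknown resource/tier: {resource!r}, {tier!r}") from None
    return [f"/v2/{resource}/{external_id}/{suffix}" for suffix in suffixes]


paths = follow_up_paths(
    "withdrawal-attempts", "pre-growth", "e27adb25-7344-3ae3-9c80-6b4879a85826"
)
```

The final step for withdrawals, registering the completed withdrawal as a sent transfer, is a POST rather than a GET and is deliberately left out of the table.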

Retrieving alerts for transaction monitoring

After you've decided whether to credit a user's funds or process a user's withdrawal attempt, KYT automatically monitors the transaction and generates alerts according to your Alert Rules. You can retrieve those alerts with the GET /v1/alerts endpoint.

If you call the endpoint without any query parameters, you will retrieve all of the alerts within your organization. To retrieve specific alerts, you can filter or sort with various query parameters.

Use the following query parameters to filter the alerts you wish to retrieve:

- asset - the asset used in the transaction.

- userId - the user's unique identifier as defined in the transfer registration.

- level - the severity of the alert, for example, SEVERE, HIGH, MEDIUM, or LOW.

- createdAt_lte - the timestamp less than or equal to when the alert generated.

- createdAt_gte - the timestamp greater than or equal to when the alert generated.

- alertStatusCreatedAt_lte - the timestamp less than or equal to when the most recent alert status was created.

- alertStatusCreatedAt_gte - the timestamp greater than or equal to when the most recent alert status was created.

Use the sort parameter with one of the following items to sort the order in which you retrieve alerts:

- timestamp - the blockchain date of the transfer that caused the alert.

- createdAt - the date the alert generated.

- alertStatusCreatedAt - the date the alert status was last updated.

- level - the severity of the alert, for example, SEVERE, HIGH, MEDIUM, or LOW.

- alertAmountUsd - the amount of the transfer that triggered the alert.

After choosing the item you wish to sort by, you must add a URL encoded space character and indicate the order as either ascending (asc) or descending (desc). For example, sort=createdAt%20asc.
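The filter and sort rules above can be combined into one URL builder. This is a minimal sketch: the function name `alerts_url` and its parameters are hypothetical, while the sort fields and the asc/desc values come from the lists above. It relies on `urllib.parse.urlencode` with `quote_via=quote`, which encodes the space in the sort value as `%20` rather than `+`.

```python
from urllib.parse import quote, urlencode

ALERTS_URL = "https://api.chainalysis.com/api/kyt/v1/alerts"
SORT_FIELDS = ("timestamp", "createdAt", "alertStatusCreatedAt", "level", "alertAmountUsd")


def alerts_url(filters=None, sort_by=None, order="asc"):
    """Build a GET /v1/alerts URL from optional filters and a sort field."""
    params = dict(filters or {})
    if sort_by is not None:
        if sort_by not in SORT_FIELDS:
            raise ValueError(f"sort field must be one of {SORT_FIELDS}")
        if order not in ("asc", "desc"):
            raise ValueError("order must be 'asc' or 'desc'")
        # The field and the order are separated by a space, encoded below as %20.
        params["sort"] = f"{sort_by} {order}"
    if not params:
        return ALERTS_URL
    return ALERTS_URL + "?" + urlencode(params, quote_via=quote)


url = alerts_url(
    {"level": "HIGH", "userId": "user001", "createdAt_gte": "2020-01-06"},
    sort_by="createdAt",
)
```

Note that `quote` also percent-encodes the colons in full timestamps (for example `12%3A42%3A14`), which is standard URL encoding for query values.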

Retrieving high severity alerts for a specified user

You can specify a combination of these query parameters to retrieve a specific result. As an example, to retrieve only HIGH severity alerts of a specific userId generated after a particular timestamp (createdAt_gte), call GET /v1/alerts?level=HIGH&userId=user001&createdAt_gte=2020-01-06

This will return all high severity alerts for user001 after January 6th, 2020. Notice the timestamp includes only the date. You can filter even further with the inclusion of time to retrieve alerts down to the microsecond: &createdAt_gte=2020-01-06T12:42:14.124Z

If you want to sort the alerts by their creation date, add sort=createdAt%20asc as a query parameter.

Retrieving severe alerts for a specified date range

You can use both createdAt_gte and createdAt_lte to retrieve alerts for a determined time period. As an example, to retrieve only SEVERE alerts within your organization between the hours of 1:00AM and 2:00AM on May 1, 2021, call GET /v1/alerts?level=SEVERE&createdAt_gte=2021-05-01T01:00:00.00Z&createdAt_lte=2021-05-01T02:00:00.00Z

You can adjust these parameters to then retrieve all SEVERE alerts between the hours of 2:00AM and 3:00AM, so on and so forth. Alternatively, you can discard the time information (T00:00:00Z) from the query parameters altogether to retrieve alerts for certain days, weeks, or even months. The level of frequency you choose to call these endpoints depends on your organizational needs.
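Stepping through consecutive hours, as described above, amounts to generating pairs of createdAt_gte and createdAt_lte values. The sketch below shows one way to do that with the standard library. The function name `hourly_windows` and the timestamp format without fractional seconds are assumptions made for this example.

```python
from datetime import datetime, timedelta, timezone

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def hourly_windows(start, hours):
    """Return (createdAt_gte, createdAt_lte) pairs for consecutive one-hour windows.

    start must be a timezone-aware datetime in UTC.
    """
    if start.utcoffset() != timedelta(0):
        raise ValueError("start must be a UTC datetime")
    windows = []
    for i in range(hours):
        begin = start + timedelta(hours=i)
        end = begin + timedelta(hours=1)
        windows.append((begin.strftime(TIMESTAMP_FORMAT), end.strftime(TIMESTAMP_FORMAT)))
    return windows


windows = hourly_windows(datetime(2021, 5, 1, 1, 0, tzinfo=timezone.utc), hours=2)
```

Because both filters are inclusive, adjacent windows share a boundary timestamp. An alert created exactly on the hour could therefore appear in two windows, so consider de-duplicating results by alert identifier.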

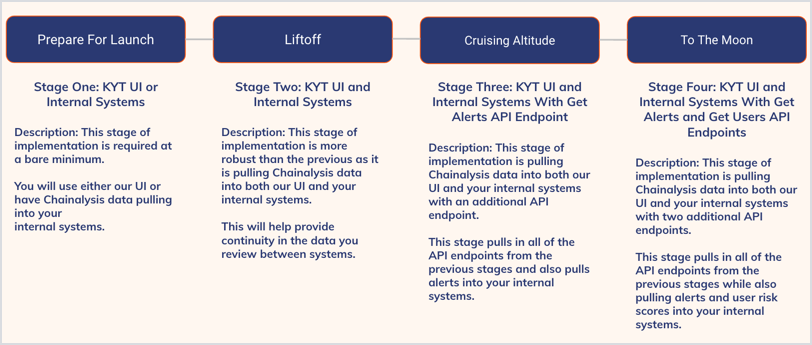

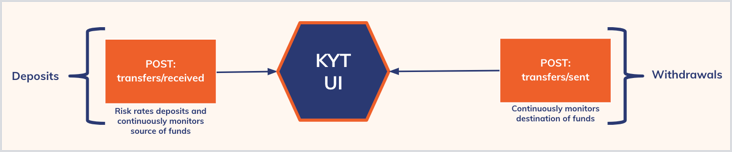

Legacy implementation

Suggested implementations of the Chainalysis KYT API are detailed below, increasing in complexity and the amount of functionality you will receive. You can choose to work entirely in the KYT user interface (UI), or build a response within your own internal systems based on feedback and analysis from Chainalysis.

Building the analysis and data from the KYT API into your internal system provides robust functionality and gives you a more comprehensive picture of your risk. We suggest reviewing example compliance workflows to help assess which implementation best meets your needs.

At minimum, the two major endpoints required for integration are: /transfers/sent and /transfers/received. For basic functionality, you must execute at least those two requests.

Overview

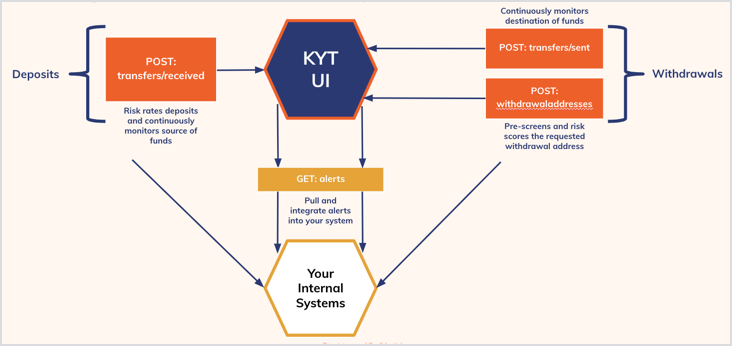

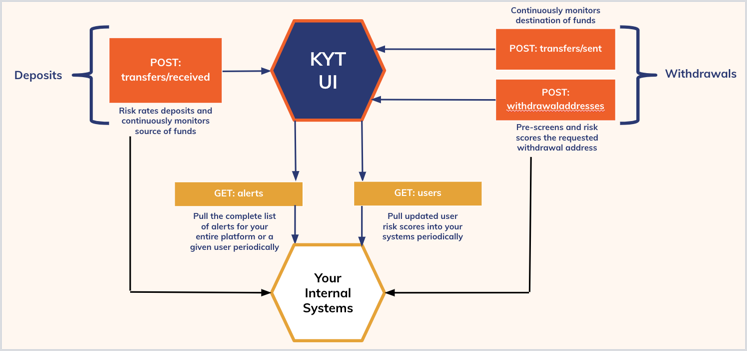

The following graphic serves as a preview and comparison for the various KYT implementations. The implementations are described in detail in the sections below, including benefits and drawbacks as well as required endpoints for each.

To help you visualize a compliance workflow using data from the KYT API, here is a basic KYT implementation.

Prepare for launch 1

KYT UI only

This is the minimum implementation and keeps all data and functionality entirely within Chainalysis’s environment. It does not pull data into your internal systems.

You will receive the full functionality of the KYT UI, but you will not have the ability to automate actions on transfers within your internal systems based on the information from KYT.

This implementation requires registering /transfers/sent and /transfers/received. /depositaddresses is optional.

- Register a received transfer

Registers a received transfer to a user and deposit address at your organization. The API response will contain a risk rating (high/low/unknown) of the counterparty that sent the transfer. If the cluster has been identified, the entity name will also be provided.

POST /users/{userId}/transfers/received

- Register a sent transfer

Registers a completed outgoing transfer from a user at your organization to another entity. Usually this is called after the /withdrawaladdresses endpoint that pre-screens a counterparty before allowing the transfer to proceed.

POST /users/{userId}/transfers/sent

- Optional: Register deposit addresses

In the future, KYT will be able to detect deposits (but not withdrawals) based on the deposit address. While this endpoint is not currently required for monitoring or integration, you can implement it now for use with upcoming functionality.

POST /users/{userId}/depositaddresses

Transactions must be associated with a User ID for user risk score calculation.

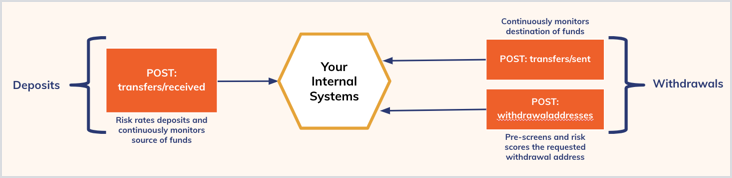

Prepare for launch 2

Internal systems only

While you can work entirely in the Chainalysis environment (see above), this implementation pulls Chainalysis’s data into your own system where you can incorporate KYT into your automated payments workflow.

Integrating Chainalysis’s data into your internal system helps you to get a more comprehensive picture of your risk activity and automate actions on transfers based on Chainalysis data. However, you will be missing out on functionality and convenience by not working within the KYT UI.

This implementation requires registering /transfers/sent and /transfers/received (described above), as well as /withdrawaladdresses.

- Pre-screen a withdrawal address

Before allowing a transfer out of your organization, you can check the risk rating of the entity (cluster) associated with the address where the intended funds are going. After receiving a risk rating and information on the potential counterparty, you can determine whether to allow the withdrawal transfer to proceed.

For example, if you want to stop the withdrawal of funds to a sanctioned or terrorist financing entity, you would use this request.

POST /users/{userId}/withdrawaladdresses

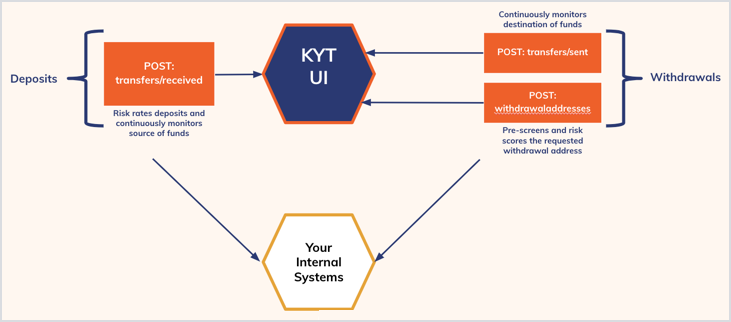

Lift off

KYT UI & Internal systems

This setup is more robust than the previous implementations, as it encompasses both the KYT UI and your internal systems. You will be able to take action on transfers within your system and also have the full functionality of the UI, helping to provide continuity in the data you review between systems.

However, it does not take advantage of all the KYT features, such as alerts.

This implementation requires registering /transfers/sent, /transfers/received, and /withdrawaladdresses (described above).

With this implementation you can:

- Create a URL link from your internal systems to the KYT UI.

- Set flags based on Chainalysis data. For example, a 'high risk' response from transfers/received puts risky deposits up for review by your compliance team.

- Pull Chainalysis data into your payment review system. For example, you can include a transfer's risk rate in your payments review queue.

Cruising altitude

KYT UI & Internal systems

This implementation pulls Chainalysis data into both the KYT UI and your internal systems with an additional API endpoint, /alerts.

Cruising Altitude brings the most powerful data that KYT offers - alerts - into your internal system. You can perform the actions mentioned above (setting flags or pulling the risk score into payments queues) with the benefit that the data you are using is the most robust. This implementation can also help you match the alerts workflows that your compliance team is likely doing in the UI.

This implementation requires registering /transfers/sent, /transfers/received, /withdrawaladdresses (described above), as well as /alerts.

- Get alerts

Retrieves the details of all of your alerts in KYT and pulls those alerts into your own system. Alerts allow you to identify risky transfers on your platform.

GET /alerts

To the moon

KYT UI & Internal systems

This implementation pulls Chainalysis data into both the KYT UI and your internal systems. It builds on the implementations above with an additional endpoint, /users. GET /users provides you with a user risk score for each of your users.

Alerts and user risk score are two powerful analytic metrics that are provided by the KYT API for assessing your risk activity. User risk scores allow you to perform user-level automated review in your internal system on top of the transfer-level review from above.

This implementation requires registering /transfers/sent, /transfers/received, /withdrawaladdresses, /alerts (described above), as well as /users.

- Get users

Retrieves details, including the user risk score (as LOW, MEDIUM, HIGH, or SEVERE), on all registered users in your system. You can use the user risk score to manually review or hold transfers made by high-risk users.

GET /users

Compliance workflows

Below are example compliance workflows using KYT’s most powerful risk assessment features to help you formulate policies and procedures based on the information provided by the KYT and Reactor UIs, and the KYT API.

The workflows below focus on three KYT features that help you prioritize risk activity: alerts, user risk score, and counterparty screen:

- Alerts in KYT are generated whenever a transfer involves a risky counterparty and/or crosses a value threshold. A single transfer can trigger multiple alerts.

- The user risk score helps you to identify high-risk users in your organization.

- Deposit and withdrawal requests registered with Chainalysis return a counterparty risk rating of highRisk, unknown, or lowRisk. If the counterparty is known (highRisk or lowRisk), the Category (for example, darknet market or exchange) and Name (for example, Hydra Marketplace or Kraken.com) are also returned.

We suggest using alerts as a notification and starting point for review and interacting with user risk profiles. The KYT Dashboard in the UI gives you a high-level overview of your risk for managerial and organizational reporting/monitoring, while alerts is where an analyst will spend most of their time.

Deposit workflow

This is a suggested workflow for funds that are received by a user at your organization. Note that in most cases, you cannot stop an incoming transfer from occurring. However, KYT helps you to detect the risk associated with the transfer and take appropriate compliance action. For example, if a user receives funds directly from a cluster categorized as child abuse material, you can decide how you want to react (e.g. freeze funds, investigate, and likely file a SAR).

1. Register the transfer

When funds arrive at a user address, POST the received transfer.

2. Check alerts and/or user risk score

You can check alerts:

- Via the UI: search for the transaction ID in the search bar at the top of the KYT UI. If an alert was raised on the transfer, it will appear in the transfer details panel.

- Via the API: by using the GET /alerts endpoint. Alert levels are returned as SEVERE, HIGH, MEDIUM, and LOW.

We suggest checking alerts first to assess risky transfer activity and then moving to review all alerts at the user level by clicking the unique URL for the user. You can also check the user’s risk score to see if the user is high risk.

You can check user risk score:

- Via the UI: search for the user’s ID in the search bar at the top of the KYT UI. The risk score is found on the user information page as Severe, High, Medium, or Low.

- Via the API: by using the riskScore property in GET /users/{userid}.

3. Take action

Take action on the received funds. For example, you can hold the transfer and submit it to be manually reviewed, immediately freeze the user’s funds, or deem the transfer non-risky.

Chainalysis continually monitors for risk on each registered transfer.
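If you automate steps 2 and 3, one approach is to reduce a transfer's alerts to their highest level and map that level to an action. The sketch below is illustrative only. It assumes each alert is a dict with a "level" key holding SEVERE, HIGH, MEDIUM, or LOW, and the mapping in `ACTIONS` is a hypothetical policy, not a Chainalysis recommendation. Replace it with your organization's own rules.

```python
# Alert levels ordered from least to most severe.
SEVERITY_ORDER = ("LOW", "MEDIUM", "HIGH", "SEVERE")

# Hypothetical policy for illustration only; define your own.
ACTIONS = {
    "SEVERE": "freeze_funds",
    "HIGH": "manual_review",
    "MEDIUM": "accept",
    "LOW": "accept",
    None: "accept",  # no alerts were raised
}


def highest_level(alerts):
    """Return the most severe level among the alerts, or None if there are none."""
    levels = [a.get("level") for a in alerts if a.get("level") in SEVERITY_ORDER]
    if not levels:
        return None
    return max(levels, key=SEVERITY_ORDER.index)


def suggested_action(alerts):
    """Map the most severe alert level to an action from the ACTIONS policy."""
    return ACTIONS[highest_level(alerts)]


action = suggested_action([{"level": "LOW"}, {"level": "HIGH"}, {"level": "MEDIUM"}])
```

Taking the maximum reflects that a single transfer can trigger multiple alerts, and the most severe one usually drives the decision.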

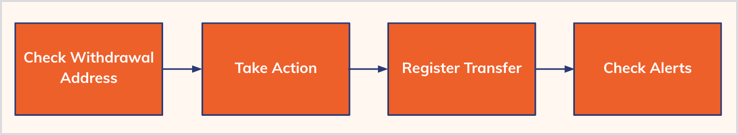

Withdrawal workflow

This is a suggested workflow for funds that are being sent by a user at your organization. Unlike deposits, you can stop a risky withdrawal from occurring if at the time of the withdrawal, the withdrawal address has been identified as risky. For example, if a user requests a withdrawal towards a sanctioned address, you can decide to block this request.

1. Check Withdrawal Address

The withdrawal prescreen is used in real-time to pre-screen for counterparty risk. When a user requests a withdrawal, register the withdrawal address by making a POST request to the /withdrawaladdresses endpoint. For known counterparties, a risk rating of high risk or low risk will be provided, as well as the counterparty’s name.

Note that it is common to withdraw to a previously unknown address, so the majority of addresses you check may have an unknown rating.

2. Take action

After performing the withdrawal address counterparty screen, take action on the pending transfer. You may choose to block a transfer that is high risk, or flag it for further review.

3. Register the transfer

Always be sure to register an approved withdrawal as a sent transfer upon completion so that your team can access it in the compliance dashboard and KYT can monitor the transfer. Note that KYT updates risk for transfers on an ongoing basis, but does not update withdrawal addresses. The latter will always return the rating when the address was first screened.

As with deposits, Chainalysis monitors sent transfers for you over time.

4. Check alerts

Look at the user information page in the UI for the user's associated withdrawal addresses and alerts. Alerts that have a sent direction mean that the withdrawal was approved.

Example compliance actions

Here are some examples of compliance actions taken by our customers when following the workflows above:

“If we notice a withdrawal request towards terrorism financing, we will show the user the withdrawal request is processing and call our Financial Intelligence Unit hotline to ask if they want us to block the transaction (and likely tip off the user) or allow it to occur (for ongoing monitoring).”

“If we detect a direct deposit from a darknet market, we will silently freeze the account. Typically we will file a SAR report, offboard the user, and allow the user to withdraw their funds via a fiat conversion to their bank account.”

“If we detect a pattern of risky (in)direct darknet market transactions, we will freeze the account. Typically we will file a SAR report, offboard the user, and allow the user to withdraw their cryptocurrency.”

“Transfers to mixing services are prohibited on our platform. If we see a withdrawal request to a mixer, we flag the transfer for review and ask the user to explain the purpose of the withdrawal.”

Internal systems

Note that instead of performing these checks manually, you can automate the process by building a response within your own internal systems. See the suggested implementations above for more information. The benefits of automating compliance include:

- Prevent successful money laundering.

- Proactively reduce risky activity from occurring.

- Nudge users toward correct behavior.

- More efficient and meaningful time usage of your compliance team.

Scripts

Dismiss indirect alerts with custom criteria

This article guides you through the creation of a Python script that dismisses indirect transfer alerts based on a specified categoryId and percentage of transfer threshold. For instance, you can dismiss all indirect sanctioned entity alerts where the percentage of the transfer is less than 1%.

After following the tutorial, you'll have a script that can be customized to fit your organization's needs for alert dismissal processes. The script does the following:

- Ingests your chosen category (categoryId) and dismissal thresholds.

- Retrieves alert identifiers by calling the GET /v1/alerts/ endpoint.

- Dismisses any alerts that meet your dismissal criteria by calling the POST /v1/alerts/{alertIdentifier}/statuses endpoint with the stored alert identifiers.
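The dismissal criteria can be expressed as one predicate applied to each retrieved alert. The following is a sketch under loud assumptions: the alert field names `exposureType`, `categoryId`, and `transferredValuePercentage` are guesses about the alert payload that this article does not confirm, so verify them against an actual GET /v1/alerts response before relying on them. The function name `should_dismiss` is also hypothetical.

```python
def should_dismiss(alert, category_id, threshold_percentage):
    """Return True if the alert is indirect, in the chosen category, and below the threshold.

    ASSUMPTION: the keys exposureType, categoryId, and
    transferredValuePercentage are illustrative field names; check them
    against a real alerts response.
    """
    percentage = alert.get("transferredValuePercentage")
    return (
        alert.get("exposureType") == "INDIRECT"
        and alert.get("categoryId") == category_id
        and percentage is not None
        and percentage < threshold_percentage
    )


example_alert = {
    "exposureType": "INDIRECT",
    "categoryId": 3,
    "transferredValuePercentage": 0.4,
}
dismiss = should_dismiss(example_alert, category_id=3, threshold_percentage=1.0)
```

Alerts that lack a percentage are never dismissed here, which errs on the side of keeping an alert open when data is missing.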

Before you start

Before beginning this tutorial, make sure you have:

- Installed Python 3.6 or a newer version.

- A valid KYT API key.

Build the script

Import necessary libraries

To build this script, you will need the following libraries:

- argparse: to get variables from the command-line tool.

- requests: to make API requests from Python.

- json: to handle sent and received JSON data.

Add the following code to import these libraries:

import argparse

import requests

import json

Set command-line variables

By using command-line arguments, you can easily set variables that can be changed depending on how you run the script. The script has arguments for the following variables:

- API key.

- Percentage of transfer dismissal threshold.

- Entity categoryId for alert dismissal.

- userId to narrow the scope of alerts to a specific user (optional).

Add the following code to define these arguments in your script:

# Define command-line arguments for the API key, dismissal threshold, categoryId, and userId values

parser = argparse.ArgumentParser()

parser.add_argument("-k", "--key", required=True, help="The API key to use for authentication.")

parser.add_argument("-p", "--percentage", required=True, help="The dismissal threshold percentage.")

parser.add_argument("-c", "--categoryId", required=True, type=int, help="The categoryId of the category you want to dismiss alerts for.")

parser.add_argument("-u", "--userId", required=False, help="The userId you want to dismiss alerts for.")

# Parse the command-line arguments for use later on

args = parser.parse_args()

Define the alerts endpoint

Both API endpoints in this script have the same base URL and require similar headers. Additionally, you will need to set the query parameters for the GET request and the request body for the POST request. For dismissing alerts, include values for the status and comment properties.

Add the following code to your script to define the API URL, headers, query parameters, and request body:

# Set the URL, query parameters, and API headers

url = "https://api.chainalysis.com/api/kyt/v1/alerts"

params = {

"limit": 20000, # Adjust this property to retrieve more alerts per GET request.

"offset": 0, # Change the offset to retrieve another batch of alerts.

# "createdAt_gte": "" # Enable this property to set a timestamp to only retrieve alerts that were generated after the specified timestamp.

}

# Add the userId query parameter when the optional -u/--userId argument is supplied

if args.userId:

    params["userId"] = args.userId

headers = {

"token": args.key,

"Content-Type": "application/json",

"accept": "application/json",

}

# Set the request body for the POST request

dismissed_status = json.dumps({

"status": "Dismissed",

"comment": f"Dismissed because the alert was indirect with an alerted amount less than {args.percentage}% of the transfer.",

})

Retrieve alerts

Now that the API has been defined, you can call the GET endpoint to retrieve alerts. Store the alerts for later use and count the number of alerts retrieved.

Add the following code to your script to retrieve, store, and count alerts:

# Make the request, convert the JSON data into a Python dictionary, print the number of retrieved alerts
response = requests.get(url, params=params, headers=headers)
if response.status_code != 200:
    print(f"Error: An error occurred. HTTP status code {response.status_code} returned")
    exit(1)
alerts_json = response.json()
print("Number of retrieved transfer alerts:", len(alerts_json["data"]))
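The limit and offset query parameters described above can be combined to page through alerts when one request does not return everything. The following is a minimal sketch, assuming the endpoint returns fewer than limit items on the final page. The fetch_page callable is hypothetical: it stands in for a requests.get call so that the loop can be shown without network access.

```python
def iter_alerts(fetch_page, limit=100):
    """Yield alerts page by page until a short (or empty) page is returned.

    fetch_page(limit, offset) is a hypothetical callable that returns the
    "data" list from one GET /v1/alerts/ request.
    """
    offset = 0
    while True:
        page = fetch_page(limit, offset)
        yield from page
        if len(page) < limit:
            break
        offset += limit


# Stand-in for the API: 250 made-up alerts served in slices.
fake_alerts = [{"alertIdentifier": f"id-{n}"} for n in range(250)]


def fake_fetch(limit, offset):
    return fake_alerts[offset:offset + limit]


all_alerts = list(iter_alerts(fake_fetch, limit=100))
print(len(all_alerts))
```

In the tutorial's script, fetch_page could wrap the existing GET request by passing the limit and offset values in params and returning response.json()["data"].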

Define the dismissal criteria

Now that alerts have been retrieved, filter through them so that only those that meet your criteria are processed. The script uses the arguments you defined earlier to provide flexibility.

Also, include a print statement to provide feedback in your command-line tool while dismissing alerts.

Add the following code to your script:

num_dismissed_alerts = 0
print('Dismissing alerts...')

# Iterate over each alert you retrieved (from the alerts_json["data"] array)
for item in alerts_json["data"]:
    # Check if the alert meets the specified criteria
    if (
        item["alertStatus"] != "Dismissed"
        and item["exposureType"] == "INDIRECT"
        and item["alertAmountUsd"] > 0
        and item["transferredValuePercentage"] < float(args.percentage)
        and item["categoryId"] == args.categoryId
    ):

Action filtered alerts

Now that the alerts have been filtered to only those that meet your criteria, use the POST endpoint and the alertIdentifier property to take action on them.

Add the following code to your script, making sure to maintain proper indentation:

        # Set the path_param variable to the value of the alertIdentifier
        path_param = item["alertIdentifier"]

        # Make the POST request using the path_param variable as the path parameter
        response2 = requests.post(f"{url}/{path_param}/statuses", headers=headers, data=dismissed_status)
        if response2.status_code == 200:
            print(f"Successfully dismissed alert with alertIdentifier: {path_param}")
            num_dismissed_alerts += 1  # Counts how many alerts meet the above criteria and are dismissed
        else:
            print(f"Error: An error occurred. HTTP status code {response2.status_code} returned.")

# Final print statement for total dismissed alerts
print(f"Number of alerts with a categoryId of \"{args.categoryId}\" dismissed: {num_dismissed_alerts}")

Note that the code above includes print statements to give you feedback and help you track progress in your command-line tool.
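To check the dismissal criteria before sending any POST requests, the condition can be pulled into its own function and tried against sample data. The following is a sketch under two assumptions: categoryId is an integer in the response (as with the sanctioned entity categoryId of 3 used elsewhere on this page), and the sample alert values are made up. The function name meets_dismissal_criteria is not part of the tutorial's script.

```python
def meets_dismissal_criteria(alert, category_id, max_percentage):
    """Return True when an alert matches the tutorial's dismissal criteria."""
    return (
        alert["alertStatus"] != "Dismissed"
        and alert["exposureType"] == "INDIRECT"
        and alert["alertAmountUsd"] > 0
        and alert["transferredValuePercentage"] < float(max_percentage)
        and alert["categoryId"] == int(category_id)
    )


# Illustrative alert; the values are made up for this example.
sample_alert = {
    "alertStatus": "Unreviewed",
    "exposureType": "INDIRECT",
    "alertAmountUsd": 12.5,
    "transferredValuePercentage": 0.4,
    "categoryId": 3,
}

print(meets_dismissal_criteria(sample_alert, "3", "1"))    # True
print(meets_dismissal_criteria(sample_alert, "3", "0.1"))  # False
```

Converting the command-line strings with float() and int() inside the function keeps the comparison type-safe even when the arguments arrive as text.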

Put the script together

Below is a completed script created from all the parts above:

import argparse
import json

import requests

# Define command-line arguments for the API key, dismissal threshold, categoryId, and userId values
parser = argparse.ArgumentParser()
parser.add_argument("-k", "--key", required=True, help="The API key to use for authentication.")
parser.add_argument("-p", "--percentage", required=True, help="The dismissal threshold percentage.")
parser.add_argument("-c", "--categoryId", required=True, type=int, help="The categoryId of the category you want to dismiss alerts for.")
parser.add_argument("-u", "--userId", required=False, help="The userId you want to dismiss alerts for.")

# Parse the command-line arguments for use later on
args = parser.parse_args()

# Set the URL, query parameters, and API headers
url = "https://api.chainalysis.com/api/kyt/v1/alerts"
params = {
    "limit": 20000,  # Adjust this property to retrieve more alerts per GET request.
    "offset": 0,  # Change the offset to retrieve another batch of alerts.
    # "createdAt_gte": "",  # Enable this property to only retrieve alerts that were generated after the specified timestamp.
}

# Add the userId query parameter when the optional -u/--userId argument is supplied
if args.userId:
    params["userId"] = args.userId

headers = {
    "token": args.key,
    "Content-Type": "application/json",
    "accept": "application/json",
}

# Set the request body for the POST request
dismissed_status = json.dumps({
    "status": "Dismissed",
    "comment": f"Dismissed because the alert was indirect with an alerted amount less than {args.percentage}% of the transfer.",
})

# Make the request, convert the JSON data into a Python dictionary, print the number of retrieved alerts
response = requests.get(url, params=params, headers=headers)
if response.status_code != 200:
    print(f"Error: An error occurred. HTTP status code {response.status_code} returned")
    exit(1)
alerts_json = response.json()
print("Number of retrieved transfer alerts:", len(alerts_json["data"]))

num_dismissed_alerts = 0
print('Dismissing alerts...')

# Iterate over each alert you retrieved (from the alerts_json["data"] array)
for item in alerts_json["data"]:
    # Check if the alert meets the specified criteria
    if (
        item["alertStatus"] != "Dismissed"
        and item["exposureType"] == "INDIRECT"
        and item["alertAmountUsd"] > 0
        and item["transferredValuePercentage"] < float(args.percentage)
        and item["categoryId"] == args.categoryId
    ):
        # Set the path_param variable to the value of the alertIdentifier
        path_param = item["alertIdentifier"]

        # Make the POST request using the path_param variable as the path parameter
        response2 = requests.post(f"{url}/{path_param}/statuses", headers=headers, data=dismissed_status)
        if response2.status_code == 200:
            print(f"Successfully dismissed alert with alertIdentifier: {path_param}")
            num_dismissed_alerts += 1  # Counts how many alerts meet the above criteria and are dismissed
        else:
            print(f"Error: An error occurred. HTTP status code {response2.status_code} returned.")

# Final print statement for total dismissed alerts
print(f"Number of alerts with a categoryId of \"{args.categoryId}\" dismissed: {num_dismissed_alerts}")

Use the script

Once you've finished your script, run it in your command-line tool with the following command:

python3 dismiss.py -k API_KEY -p DISMISSAL_PERCENTAGE -c ENTITY_CATEGORY_ID

For example, you can dismiss all indirect sanctioned entity alerts whose percentage of transfer is less than one (<1%) with the following command:

python3 dismiss.py -k 123abc -p 1 -c 3

Here, 3 is the categoryId of the sanctioned entity category. If successful, you should see output in your command-line tool similar to the following:

Number of retrieved transfer alerts: 83
Dismissing alerts...
Successfully dismissed alert with alertIdentifier: 0f1824fa-7ca8-11ed-a464-a77051ec428e
Successfully dismissed alert with alertIdentifier: 0f180628-7ca8-11ed-a464-27d19535f166
Successfully dismissed alert with alertIdentifier: 0f17a0de-7ca8-11ed-a464-f7dca967b16b
Successfully dismissed alert with alertIdentifier: 0f175e26-7ca8-11ed-a464-bbb6707239e0
Successfully dismissed alert with alertIdentifier: 0ef823da-7ca8-11ed-a464-b7f5b6483c01
Successfully dismissed alert with alertIdentifier: 0ef8029c-7ca8-11ed-a464-7bf5ea6bd720
Successfully dismissed alert with alertIdentifier: 0dd079ee-7ca8-11ed-a464-c3f361e0ebe3
Successfully dismissed alert with alertIdentifier: 0dd020c0-7ca8-11ed-a464-17995b2d3f2c
Successfully dismissed alert with alertIdentifier: 0c17221a-7ca8-11ed-a464-8b5786357333
Successfully dismissed alert with alertIdentifier: 0bfdcdd8-7ca8-11ed-a464-877dcb602d21
Number of alerts with a categoryId of "3" dismissed: 10

Customizations

This script can be tailored to meet the needs of your organization. Here are a few examples of how you could customize it:

- Filter alerts based on additional variables by using additional query parameters.

- Programmatically dismiss alerts for users in specific jurisdictions, such as those where gambling is legal.

- Programmatically dismiss alerts for offboarded users.

- Programmatically dismiss alerts for transfers older than a year.

To implement these customizations, you may need to join with your internal data to determine the status, jurisdiction, or other characteristics of a user.
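As one illustration of joining alerts with internal data, the sketch below selects alerts that belong to offboarded users. The offboarded_user_ids set and the sample alerts are hypothetical; in practice the set would come from your own user database.

```python
def alerts_for_offboarded_users(alerts, offboarded_user_ids):
    """Return the alerts whose userId appears in the offboarded set."""
    offboarded = set(offboarded_user_ids)
    return [alert for alert in alerts if alert.get("userId") in offboarded]


# Hypothetical internal data and made-up alerts for illustration.
offboarded_user_ids = {"user0002", "user0007"}
alerts = [
    {"alertIdentifier": "a1", "userId": "user0001"},
    {"alertIdentifier": "a2", "userId": "user0002"},
    {"alertIdentifier": "a3", "userId": "user0007"},
]

to_dismiss = alerts_for_offboarded_users(alerts, offboarded_user_ids)
print([alert["alertIdentifier"] for alert in to_dismiss])  # ['a2', 'a3']
```

The resulting list could then be passed to the same POST loop the tutorial uses for dismissal.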

Retrieve behavioral alerts by date

In this tutorial, you will create a Python script to retrieve and filter behavioral alerts from the GET /v1/alerts/ endpoint. With the script, you can retrieve all behavioral alerts based on a specified date (day, week, or month) and an optional userId. Since behavioral alerts are not tied to an individual transfer, using dates can be a helpful way to retrieve and organize them.

The script does the following:

- Ingests your chosen date, which can be an individual day, week, or month.

- Translates your inputted date into variables that can filter alerts.

- Retrieves and filters behavioral alerts according to your input.

- Saves your alerts to a CSV and prints them to your command-line interface.

Prerequisites

Before starting, please ensure you have the following:

- Python and pip installed on your system (preferably Python 3.6 or later).

- A code editor or IDE of your choice.

- Basic knowledge of Python and REST APIs.

Install required libraries

To begin, install the necessary libraries for your script. Open your command-line interface and run the following command:

pip install requests python-dateutil

Note that the other libraries used in the script below are included in Python's standard library and don't need installation.

Import the required libraries

In your code editor, create a new Python file called behavioral_alerts.py. Start by importing the required libraries:

import argparse

import csv

from datetime import datetime, timedelta, timezone

from dateutil.parser import isoparse

from requests import get

import json

Define helper functions

Your script will contain five helper functions that organize its different actions. This section of the tutorial walks you through creating them.

Parse and format date range

These functions are responsible for handling date inputs and converting them into suitable formats for querying the API:

- get_date_range(date_str): takes a date string in the ISO 8601 format as an argument and returns a tuple containing the start and end dates for the query. It handles day, week, and month inputs, which are useful for specifying the date range when making API requests.

- get_requested_window_size(date_str): takes a date string in the ISO 8601 format as an argument and returns the requested window size as a string (1 day, 7 days, 1 mon). This value is used to filter the response by the windowSize property.

Add the following code to your file:

# Function to parse the date string and return the start and end dates for the query
def get_date_range(date_str):
    # isoparse raises ValueError for malformed input
    date = isoparse(date_str)
    if "W" in date_str:
        year, week = map(int, date_str.split("-W"))
        # %G, %V, and %u are the ISO 8601 year, week, and weekday directives (Python 3.6+),
        # so the week number is interpreted as an ISO week starting on Monday
        start_date = datetime.strptime(f"{year}-W{week:02d}-1", "%G-W%V-%u")
        end_date = start_date + timedelta(days=6)
    elif date_str.count('-') == 1:
        year, month = date.year, date.month
        start_date = datetime(year, month, 1)
        # Jump into the next month, snap to its first day, then step back one day
        end_date = (start_date + timedelta(days=32)).replace(day=1) - timedelta(days=1)
    else:
        start_date = date
        end_date = start_date + timedelta(days=1)
    return start_date, end_date

# Function to determine the requested window size based on the date string
def get_requested_window_size(date_str):
    # Validate the input; isoparse raises ValueError for malformed dates
    isoparse(date_str)
    if "W" in date_str:
        return "7 days"
    elif date_str.count('-') == 1:
        return "1 mon"
    else:
        return "1 day"
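To see which calendar days each input format covers, the following standalone sketch maps a date string to a window using only the standard library. It is an illustration rather than the tutorial's helper: the function name iso_date_window is hypothetical, and it returns an exclusive end bound (the first instant after the window), which differs from the end dates computed by get_date_range.

```python
from datetime import datetime, timedelta


def iso_date_window(date_str):
    """Return (start, exclusive_end, window_size) for a day, week, or month string."""
    if "W" in date_str:
        # %G/%V/%u are the ISO 8601 year, week, and weekday directives (Python 3.6+).
        start = datetime.strptime(f"{date_str}-1", "%G-W%V-%u")
        return start, start + timedelta(days=7), "7 days"
    if date_str.count("-") == 1:
        start = datetime.strptime(date_str, "%Y-%m")
        # Jump past the end of the month, then snap to the first of the next month.
        end = (start + timedelta(days=32)).replace(day=1)
        return start, end, "1 mon"
    start = datetime.strptime(date_str, "%Y-%m-%d")
    return start, start + timedelta(days=1), "1 day"


for value in ("2023-01-01", "2022-12", "2020-W01"):
    print(value, iso_date_window(value))
```

Note that ISO week 2020-W01 starts on Monday, December 30, 2019, so a week string can map to dates in the previous calendar year.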

Filter alerts

These functions help filter the retrieved alerts based on the requested window size and date range, ensuring that only relevant data is returned:

- window_size_matches(alert, requested_window_size, start_date, end_date): checks if the window size of a given alert matches the requested windowSize and if the alert's period_start is within the requested date range. It returns True if both conditions are met and False otherwise.

- filter_alerts(alerts, requested_window_size, start_date, end_date): takes a list of alerts and filters them based on the requested window size (windowSize) and date range (period). It returns a new list containing only the alerts that meet the filtering criteria.

Add the following code to your file:

# Function to check if the window size of the alert matches the requested window size
def window_size_matches(alert, requested_window_size, start_date, end_date):
    # Parse the period_start and period_end from the alert
    period_start, period_end = [datetime.strptime(x.replace('+00', '+0000'), '%Y-%m-%d %H:%M:%S%z') for x in alert['period'][2:-2].split('","')]

    # Set timezone for start_date and end_date
    start_date = start_date.replace(tzinfo=timezone.utc)
    end_date = end_date.replace(tzinfo=timezone.utc)

    # Check if the period_start of the alert is within the requested date range
    if not (start_date <= period_start < end_date):
        return False

    # Check if the window size of the alert matches the requested window size
    if alert['windowSize'] != requested_window_size:
        return False

    return True

# Function to filter alerts based on the requested window size and date range
def filter_alerts(alerts, requested_window_size, start_date, end_date):
    filtered_alerts = []
    for alert in alerts:
        if window_size_matches(alert, requested_window_size, start_date, end_date):
            filtered_alerts.append(alert)
    return filtered_alerts
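The period parsing step is the least obvious part of the filter, so it may help to look at it in isolation. The sketch below assumes the period string has the shape implied by the slicing and splitting in the code above (an opening bracket, two quoted timestamps with a +00 offset, and a closing parenthesis). The sample value is made up, and parse_period is a hypothetical helper name.

```python
from datetime import datetime, timezone


def parse_period(period):
    """Split a period string into timezone-aware start and end datetimes."""
    # Drop the leading '["' and trailing '")', then split the two timestamps.
    raw_start, raw_end = period[2:-2].split('","')
    fmt = "%Y-%m-%d %H:%M:%S%z"
    # The offset is expanded from "+00" to "+0000" so that %z can parse it.
    return tuple(
        datetime.strptime(value.replace("+00", "+0000"), fmt)
        for value in (raw_start, raw_end)
    )


# Made-up sample in the assumed format.
sample = '["2023-01-02 00:00:00+00","2023-01-09 00:00:00+00")'
start, end = parse_period(sample)
print(start.isoformat(), end.isoformat())
```

Because the parsed values carry a UTC offset, they can only be compared with other timezone-aware datetimes, which is why the filter attaches a UTC timezone to start_date and end_date first.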

Interact with the API

The final get_alerts(api_key, start_date, end_date, userId=None) function sends a GET request to the API with the specified query parameters (including optional userId) and returns the retrieved alerts as a list of dictionaries. The function raises an exception if the API response status code is not 200.

Add the following code to your file:

# Function to GET alerts from the API
def get_alerts(api_key, start_date, end_date, userId=None):
    # Define the API endpoint and headers
    url = 'https://api.chainalysis.com/api/kyt/v1/alerts/'
    headers = {
        "token": api_key,
        "Content-Type": "application/json",
        "accept": "application/json"
    }

    # Set timezone for start_date and end_date
    start_date = start_date.replace(tzinfo=timezone.utc)
    end_date = end_date.replace(tzinfo=timezone.utc)

    # Define the query parameters for the API request
    params = {
        "createdAt_gte": start_date.isoformat(),
        "createdAt_lte": end_date.isoformat(),
        "alertType": "BEHAVIORAL",
        "limit": 20000,
    }

    # Add the userId query parameter if it was submitted as an argument
    if userId:
        params["userId"] = userId

    # Send the API request and check for any errors
    response = get(url, headers=headers, params=params)
    if response.status_code != 200:
        raise Exception(f"Error: {response.status_code} - {response.text}")

    # Return the 'data' field from the API response
    return response.json()['data']

Define the main function

The main function is responsible for coordinating the helper functions, handling command-line arguments, retrieving alerts then filtering alerts, and exporting the results to a CSV file. The rest of this tutorial guides you through the creation of the main function.

Handle command-line arguments

In this part of the script, use the argparse library to define and parse the command-line arguments. This allows users to specify the required API key, the required date, and an optional userId when running the script. Add the following code to your file:

if __name__ == "__main__":
    # Parse command-line arguments
    parser = argparse.ArgumentParser(description='Retrieve behavioral alerts')
    parser.add_argument('-k', '--key', required=True, help='API key for authentication')
    parser.add_argument('-d', '--date', required=True, help='Date for which to retrieve alerts (day, week, or month) in ISO 8601 format')
    parser.add_argument('-u', '--userId', required=False, help='Optional userId query param to filter alerts by')
    args = parser.parse_args()

Calculate date range and requested window size

Using the parsed command-line arguments, call the helper functions get_date_range() and get_requested_window_size() to calculate the date range and the requested window size for the query.

Add the following code to your main function, being sure to maintain indentation:

    start_date, end_date = get_date_range(args.date)
    requested_window_size = get_requested_window_size(args.date)

Retrieve and filter alerts

Next, call the get_alerts() function to retrieve alerts from the API for the specified date range and the optional userId. Then, use the filter_alerts() function to filter the alerts based on the requested window size and date range.

Add the following code to your main function, being sure to maintain indentation:

    alerts = get_alerts(args.key, start_date, end_date, args.userId)
    filtered_alerts = filter_alerts(alerts, requested_window_size, start_date, end_date)

Export results to a CSV file

Finally, loop through the filtered alerts and write them to a CSV file using the csv library. Also, you can print some relevant information about each alert, as well as the total number of retrieved alerts.

Add the following code to your main function, being sure to maintain indentation:

    # Initialize the counter so the final print works even when no alerts match
    alert_count = 0
    with open('filtered_alerts.csv', mode='w', newline='', encoding='utf-8') as csv_file:
        if filtered_alerts:
            fieldnames = list(filtered_alerts[0].keys())
            writer = csv.DictWriter(csv_file, fieldnames=fieldnames)
            writer.writeheader()
            for alert in filtered_alerts:
                # Write the alert to the CSV file
                writer.writerow(alert)

                # Print select properties
                print(f"Alert ID: {alert['alertIdentifier']}")
                print(f"Alert Type: {alert['alertType']}")
                print(f"Level: {alert['level']}")
                print(f"Window Size: {alert['windowSize']}")
                print(f"Period: {alert['period']}")
                print(f"User ID: {alert['userId']}")
                print(f"Created at: {alert['createdAt']}")
                print()

                # Uncomment the next line to print the alert's entire JSON
                # print(json.dumps(alert, indent=2))
                alert_count += 1
    print(f"Total retrieved alerts: {alert_count}")
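The export step takes its CSV header from the first alert's keys. csv.DictWriter raises a ValueError by default when a later row contains a key that is not in fieldnames, so if alerts can carry different sets of properties (an assumption, not something the API reference here confirms), one option is to build the header from the union of all keys. The sketch below writes to an in-memory buffer with made-up alerts; write_alerts_csv is a hypothetical helper name.

```python
import csv
import io


def write_alerts_csv(alerts, stream):
    """Write alerts to a CSV stream, using the union of all keys as the header."""
    fieldnames = []
    for alert in alerts:
        for key in alert:
            if key not in fieldnames:
                fieldnames.append(key)
    # restval fills in properties that a given alert does not have.
    writer = csv.DictWriter(stream, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(alerts)
    return len(alerts)


# Made-up alerts with differing keys.
sample_alerts = [
    {"alertIdentifier": "a1", "level": "HIGH"},
    {"alertIdentifier": "a2", "level": "LOW", "userId": "user0001"},
]

buffer = io.StringIO()
count = write_alerts_csv(sample_alerts, buffer)
print(buffer.getvalue())
```

To write to disk instead, pass a file opened with newline='' in place of the StringIO buffer.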

Execute the script

To execute the script:

- Save the provided Python script as behavioral_alerts.py in your working directory.

- Open your command-line tool and navigate to the directory containing the script.

- Run the script with the required arguments:

- -k or --key: your API key for authentication.

- -d or --date: the date for which to retrieve alerts in ISO 8601 format. Use one of the following formats:

  - YYYY-MM-DD for a specific day, like 2023-01-01.

  - YYYY-MM for an entire month, like 2022-12.

  - YYYY-Www for a given week, where ww is the week number, like 2022-W42.

- -u or --userId (optional): a KYT user ID to filter alerts by.

Example usage:

python3 behavioral_alerts.py -k API_KEY -d 2023-W01 -u USER_ID

The script will send a request to the GET /v1/alerts/ endpoint, retrieve alerts for the specified date range, and filter them based on the requested window size and date range. The filtered alerts will be saved as a CSV file named filtered_alerts.csv in the same directory as the script, and the alert information will also be printed to the console. The total number of retrieved alerts will be displayed at the end of the script's execution.

Usage

You can schedule this script to run daily for the previous day, weekly for the previous week, and monthly for the previous month to retrieve newly generated behavioral alerts. What sets this logic apart from filtering on createdAt alone is the client-side filtering: depending on the type of date you enter, the script filters on the corresponding windowSize and period response properties. Once new alerts are retrieved, you can take action on the associated userId accordingly.

Retrieve alerts as Slack notifications

This article guides you through a Python script that retrieves KYT alerts as Slack notifications. You can use the script as-is or customize it (for example, to create notifications for all alerts or only alerts that meet a target criteria).

The script is especially beneficial for team members in your organization who may not be familiar with the API or have time to monitor KYT's dashboard constantly.

The Python script outlined here automates the following things:

- Checks for alerts generated since the last time you ran the script.

- Sends a Slack notification and link for any newly generated alerts.

- Saves the timestamp when you last ran the script for future reference.

Before you start

Before you continue this tutorial, please ensure you meet the following prerequisites:

- Install Python 3.6 or greater.

- Have a valid Chainalysis KYT API Key.

- Have a Slack workspace that can receive notifications.

Build the script

Import the necessary libraries

At its core, the script is a client that sends HTTP requests to the KYT API and receives JSON in response. To interact with the API successfully, import the following libraries:

- datetime: provides the time in the appropriate format.

- requests: allows Python to make API requests (to call the KYT API).

- argparse: allows you to input variables on the command line.

- json: parses the returned JSON responses.

Import each of these libraries:

from datetime import datetime

import requests

import argparse

import json

Enable multiple users to access the script

argparse allows you to input arguments that the script can use as variables. In this case, the argument is your API key, which identifies your KYT organization and authenticates your requests. If multiple team members use the script, each can input their own KYT API key on the command line.

The following code creates an argument that asks for an API key upon running:

parser = argparse.ArgumentParser(description="Check for new alerts")

parser.add_argument("-k", "--key", required=True,

help="Chainalysis API Key",metavar="key")

args = parser.parse_args()

Retrieve alerts

This function checks for KYT alerts generated since the script was last run. It calls the GET /v1/alerts/ endpoint with the query parameter createdAt_gte to avoid creating notifications for old alerts.

As an example, GET https://api.chainalysis.com/api/kyt/v1/alerts/?createdAt_gte=2021-06-01T12:30:00 retrieves all alerts generated after 12:30PM UTC on June 1st, 2021.

The following code creates the function get_alerts, which only retrieves alerts generated since the last time the script was run.

def get_alerts():
    """Retrieve alerts from KYT that were generated after the last time the script was run."""
    base = "https://api.chainalysis.com"
    h = {
        'token': args.key,
        'Content-Type': 'application/json',
        'accept': 'application/json'
    }
    # createdAt_gte limits the results to alerts created at or after last_runtime
    url = f"{base}/api/kyt/v1/alerts/?createdAt_gte={last_runtime}"
    response = requests.get(url, headers=h)
    return response.json()

Send Slack notifications

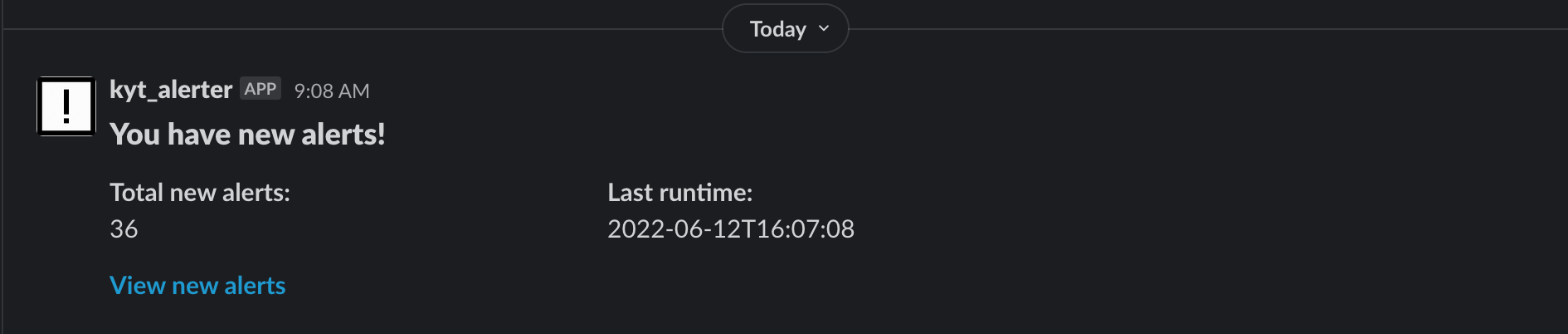

Once you've retrieved your newly created alerts from KYT, you can use Slack's webhook to deliver them in a notification to your Slack workspace. To learn more about creating a webhook URL for your Slack workspace, see Slack's documentation Sending messages using Incoming Webhooks. Once you have this URL, replace it in the code below as the value for url.

The following code creates the function send_slack_message, which sends you a Slack notification with the following information:

- a notification title (for example, "You have new alerts!").

- the total number of new alerts.

- the timestamp of the last runtime.

- a link to view the alerts in KYT's dashboard.

def send_slack_message():
    """Trigger a Slack notification via webhook"""
    url = ""  # Insert your webhook URL here.
    h = {'Content-Type': 'application/json'}
    payload = {
        "blocks": [
            {
                "type": "header",
                "text": {
                    "type": "plain_text",
                    "text": "You have new alerts!"
                }
            },
            {
                "type": "section",
                "fields": [
                    {
                        "type": "mrkdwn",
                        "text": f"*Total new alerts:*\n{total_alerts}"
                    },
                    {
                        "type": "mrkdwn",
                        "text": f"*Last runtime:*\n{last_runtime[0:19]}"
                    }
                ]
            },
            {
                "type": "section",
                "fields": [
                    {
                        "type": "mrkdwn",
                        "text": f"<https://kyt.chainalysis.com/alerts?alertStatus=Unreviewed&createdAt_gte={last_runtime}|*View new alerts*>"
                    }
                ]
            }
        ]
    }
    response = requests.post(url, headers=h, data=json.dumps(payload))
    # print(response.text)  # If your webhook is working as expected, this will return 'ok'.

You can customize the payload variable to present different information. This variable was constructed using Slack's Block Kit Builder.

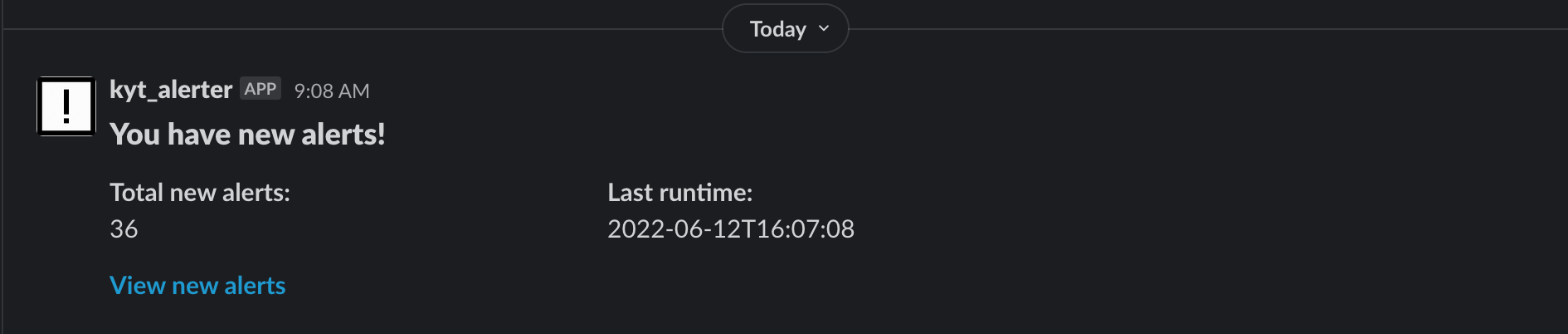

The code and payload above generate a Slack notification that looks like this:

Update your latest run time

After you have retrieved any new alerts, you want to ensure you don't re-retrieve old alerts. To retrieve only newly generated alerts, the code below creates the function update_last_runtime to rewrite the script and set the last_runtime variable as the current time in UTC. The get_alerts function uses the last_runtime variable when checking for alerts.

def update_last_runtime():
    """Modify script to set last_runtime variable to the current time in UTC."""
    with open(__file__, 'r') as f:
        lines = f.read().split('\n')

    # Find which line the last_runtime variable resides on and modify it to the current time
    variable_index = [i for i, e in enumerate(lines) if "last_runtime = " in e]
    lines[variable_index[0]] = (f'last_runtime = "{(str(datetime.utcnow())).replace(" ", "T")}"')

    # Update the script to include the last_runtime variable as the current time in UTC.
    with open(__file__, 'w') as f:
        f.write('\n'.join(lines))
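The tutorial persists the last run time by rewriting the script file itself. A different technique, shown here only as an alternative sketch, is to keep the timestamp in a small state file next to the script. The helper names and the last_runtime.txt file name are hypothetical, and the demonstration uses a temporary directory so it leaves nothing behind.

```python
import os
import tempfile
from datetime import datetime, timezone


def read_last_runtime(path, default):
    """Return the stored timestamp, or default when no state file exists yet."""
    try:
        with open(path, "r", encoding="utf-8") as f:
            return f.read().strip() or default
    except FileNotFoundError:
        return default


def write_last_runtime(path, now=None):
    """Store the current UTC time as YYYY-MM-DDTHH:MM:SS and return it."""
    now = now or datetime.now(timezone.utc)
    stamp = now.strftime("%Y-%m-%dT%H:%M:%S")
    with open(path, "w", encoding="utf-8") as f:
        f.write(stamp)
    return stamp


# Demonstration in a temporary directory; "last_runtime.txt" is a hypothetical name.
with tempfile.TemporaryDirectory() as tmp:
    state_file = os.path.join(tmp, "last_runtime.txt")
    first = read_last_runtime(state_file, default="1970-01-01T00:00:00")
    saved = write_last_runtime(state_file)
    second = read_last_runtime(state_file, default="1970-01-01T00:00:00")
    print(first, saved, second)
```

Keeping state outside the script means the script file can stay read-only and under version control, at the cost of managing one extra file.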

Putting it together

If you combine the sections above, you can write a template script that sends a notification whenever there is at least one newly generated alert:

from datetime import datetime
import argparse
import json
import sys

import requests

parser = argparse.ArgumentParser(description="Check for new alerts")
parser.add_argument(
    "-k", "--key", required=True, help="Chainalysis API Key", metavar="key"
)
args = parser.parse_args()

last_runtime = (str(datetime.utcnow())).replace(" ","T")


def get_alerts():
    """Get any alerts from KYT that were generated after the last time the script was run"""
    base = "https://api.chainalysis.com"
    h = {
        "token": args.key,
        "Content-Type": "application/json",
        "accept": "application/json",
    }
    url = f"{base}/api/kyt/v1/alerts/?createdAt_gte={last_runtime}"
    response = requests.get(url, headers=h)
    if response.status_code != 200:
        print("Failed to get alerts. Try again. Exiting...")
        sys.exit(1)
    return response.json()


def send_slack_message():
    """Trigger a Slack notification via webhook."""
    url = ""  # Insert your webhook URL here and keep it secret
    h = {"Content-Type": "application/json"}
    payload = {
        "blocks": [
            {"type": "header", "text": {"type": "plain_text", "text": "You have new alerts!"}},
            {
                "type": "section",
                "fields": [
                    {"type": "mrkdwn", "text": f"*Total new alerts:*\n{total_alerts}"},
                    {
                        "type": "mrkdwn",
                        "text": f"*Last runtime:*\n{last_runtime[0:19]}",
                    },
                ],
            },
            {
                "type": "section",
                "fields": [
                    {
                        "type": "mrkdwn",
                        "text": f"<https://kyt.chainalysis.com/alerts?alertStatus=Unreviewed&createdAt_gte={last_runtime}|*View new alerts*>",
                    }
                ],
            },
        ]
    }
    response = requests.post(url, headers=h, data=json.dumps(payload))
    # print(response.text) <- if your webhook is working as expected, this will return 'ok'


def update_last_runtime():
    """Modify script to set last_runtime variable to current time in UTC"""
    with open(__file__, "r") as f:
        lines = f.read().split("\n")

    # find which line the last_runtime variable resides on
    variable_index = [i for i, e in enumerate(lines) if "last_runtime = " in e]
    lines[
        variable_index[0]
    ] = f'last_runtime = "{(str(datetime.utcnow())).replace(" ","T")}"'

    # update script to include last_runtime variable as time now in UTC
    with open(__file__, "w") as f:
        f.write("\n".join(lines))


if __name__ == "__main__":
    alerts = get_alerts()
    total_alerts = alerts["total"]
    if total_alerts > 0:
        send_slack_message()
    update_last_runtime()

Use the script

After completing your script, follow the steps below to use it:

- Generate an API key here. (If you are unable to, contact support to check your permissions.)

- Using the sections above, build your script (we named ours alerter.py).

- In your CLI, navigate to the directory where your script lives.

- Run the following command, being sure to replace $KEY with your KYT API key: python3 alerter.py -k $KEY

Congratulations! If successful, you should have received a Slack notification in your workspace detailing new alerts.

Frequency

Run the script at your desired frequency with a utility of choice. For example, cron is a job scheduler that allows you to run scripts (and other things) at fixed times, dates, or intervals. You can use cron to enable workflows that run only Monday-Friday, avoiding weekend notifications, once a month, or on other cadences. For some examples of how to write various scheduling expressions, see crontab guru.

Customizations

There are many ways to customize the type of alerts you retrieve. Depending on your volume of alerts and compliance workflows, you may want to restrict notifications to specific categories, alert severities, or user groups. The following examples detail some of these possibilities:

Standard

The following code sends a notification whenever there is at least one new alert:

if total_alerts > 0:
    send_slack_message()

Severity

The following code only sends notifications when a target alert severity of HIGH or SEVERE is found:

if total_alerts > 0:
    # Keep only the alerts whose level is HIGH or SEVERE
    severity_count = [alert for alert in alerts['data'] if alert['level'] in ('HIGH', 'SEVERE')]
    if len(severity_count) > 0:
        send_slack_message()

You could filter the above to target only SEVERE severities.

Category

The following code only sends notifications when the target alert category of sanctioned entity (categoryId of 3) is found:

if total_alerts > 0:
    # Keep only the alerts in the sanctioned entity category
    category_count = [alert for alert in alerts['data'] if alert['categoryId'] == 3]
    if len(category_count) > 0:
        send_slack_message()

You could filter the above to target any desired category.

User group

The following code only sends notifications for user IDs (userId) specified in a list (for example, userid_list):

if total_alerts > 0:
    # Keep only alerts that belong to a user ID in userid_list.
    target_user_count = [alert for alert in alerts if alert['userId'] in userid_list]
    # Send a single notification if any alert matched.
    if len(target_user_count) > 0:
        send_slack_message()

You could specify multiple different lists of different groups of user IDs.
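The filters above can also be combined into a single check. The following is a minimal sketch, assuming each alert is a dictionary with the level, categoryId, and userId keys used in the examples above. The function name should_notify and its parameters are hypothetical and are not part of alerter.py:

```python
def should_notify(alerts, levels=None, category_ids=None, user_ids=None):
    """Return True if at least one alert passes every filter that is set.

    A filter left as None is ignored, so should_notify(alerts) behaves
    like the "Standard" example: any alert triggers a notification.
    """
    for alert in alerts:
        if levels is not None and alert.get('level') not in levels:
            continue
        if category_ids is not None and alert.get('categoryId') not in category_ids:
            continue
        if user_ids is not None and alert.get('userId') not in user_ids:
            continue
        # This alert passed every active filter.
        return True
    return False
```

For example, should_notify(alerts, levels={'HIGH', 'SEVERE'}, category_ids={3}) returns True only when a single alert is both HIGH or SEVERE and in category 3, after which you would call send_slack_message().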

Resources

Asset tiers

What information do I need to send?

The asset's tier determines which request body properties are required. For mature and emerging assets, KYT populates transfer information with blockchain and pricing data.

For pre-growth assets, the additional information (for example, transferTimestamp or assetAmount) is optional, but we suggest supplying it for maximal value as KYT uses it to generate alerts.

In subsequent GET requests, KYT will return only the information supplied in the initial POST registration request.

Once a network graduates to the emerging or mature tiers, KYT will backfill the transfer with blockchain and pricing data.

For more information about KYT's functionality for each tier, see Asset coverage.

Mature and emerging

To register transfers on mature or emerging networks, you need to supply at minimum the following request body properties:

- network
- asset
- transferReference

Pre-growth

To register transfers from pre-growth networks, you need to supply at minimum the following request body properties:

- network
- asset
- transferReference

To maximize KYT value, we recommend you also supply the following request body properties:

- transferTimestamp
- assetAmount
- outputAddress
- inputAddresses
- assetPrice
- assetDenomination
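Before sending a registration request, you may want to check a request body against the property lists above. The following is a minimal sketch that uses only the property names listed in this section. The function name check_transfer_body is hypothetical, and the sketch checks only whether properties are present, not their values or types:

```python
REQUIRED = ('network', 'asset', 'transferReference')
RECOMMENDED = (
    'transferTimestamp', 'assetAmount', 'outputAddress',
    'inputAddresses', 'assetPrice', 'assetDenomination',
)


def check_transfer_body(body):
    """Raise if a required property is missing; return missing recommended ones."""
    missing_required = [name for name in REQUIRED if name not in body]
    if missing_required:
        raise ValueError('Missing required properties: ' + ', '.join(missing_required))
    # Recommended properties are optional, so report them instead of raising.
    return [name for name in RECOMMENDED if name not in body]
```

An empty list means the body carries every recommended property. A non-empty list names the properties you could still add for a pre-growth transfer.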

network and asset properties

The network property informs KYT which blockchain network the transaction occurred on. For example, values could be Ethereum, Avalanche, Bitcoin, Lightning, or other network names.

The asset property informs KYT of which asset or metatoken was used in the transfer. For example, if Ether was transacted, you would supply ETH as the value. If the ERC-20 Aave was transacted, you would supply AAVE as the value.

The combination of network and asset allows KYT to distinguish between assets that operate on two or more networks, such as the stablecoin USDT. USDT exists on multiple networks, including Ethereum, Tron, and Avalanche. Another example is bitcoin, which exists on both the Bitcoin and Lightning networks.

To register a transfer of USDT on the Avalanche network, you would supply "network": "Avalanche", "asset": "USDT". However, to register a transfer of USDT on the Ethereum network, you would supply "network": "Ethereum", "asset": "USDT".

For lists of the networks Chainalysis currently supports, see Mature and emerging networks and Pre-growth networks.

transferReference property

The transferReference property enables Chainalysis to locate the transfer on a blockchain and typically comprises a transaction hash and output address.

The transaction hash is a unique identifier a blockchain generates for each transaction and is used to identify the transaction. The output address is the destination address for funds within the transaction. It is used to determine the particular transfer within a transaction (which may contain multiple transfers). For received transfers, the output address is an address you control. For sent transfers, the output address is an external address.

Because blockchains use different record-keeping models (for example, UTXO or account-based), you must supply different values for the transferReference property depending on the blockchain. In our list of networks, we indicate the proper format for the property.

UTXO model

For networks using a UTXO model, you must reference a transaction hash and either the corresponding output address or the corresponding output index:

- {transaction_hash}:{output_address}, or
- {transaction_hash}:{output_index}

As an example, let's say you want to register a transfer with the transaction hash 9022c2d59d1bed792b6449e956b2fe26b44b1043bbc1662d92ecd29526d7f34e and an output address of 18SuMh4AFgTSQRvwFzdYGieHtgKDveHtc, which is in the 6th place in the transaction.

To register the above transfer using an output address, the property should look like this:

"transferReference": "9022c2d59d1bed792b6449e956b2fe26b44b1043bbc1662d92ecd29526d7f34e:18SuMh4AFgTSQRvwFzdYGieHtgKDveHtc"

To register the transfer using the output index, the property should look like this:

"transferReference": "9022c2d59d1bed792b6449e956b2fe26b44b1043bbc1662d92ecd29526d7f34e:5"

- The output index value is 5 because the index starts at 0.
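The two UTXO formats can be built with a small helper. The following is a minimal sketch, and the function name utxo_transfer_reference is hypothetical. It joins the transaction hash with either the output address or the zero-based output index, and does not check that the values exist on a blockchain:

```python
def utxo_transfer_reference(transaction_hash, output_address=None, output_index=None):
    """Build a UTXO-style transferReference from a hash and one output locator."""
    # Exactly one of the two locators must be supplied.
    if (output_address is None) == (output_index is None):
        raise ValueError('Supply exactly one of output_address or output_index')
    if output_index is not None:
        if output_index < 0:
            raise ValueError('output_index is zero-based and cannot be negative')
        return f'{transaction_hash}:{output_index}'
    return f'{transaction_hash}:{output_address}'


tx_hash = '9022c2d59d1bed792b6449e956b2fe26b44b1043bbc1662d92ecd29526d7f34e'
position = 6  # the output is in the 6th place in the transaction
by_index = utxo_transfer_reference(tx_hash, output_index=position - 1)
by_address = utxo_transfer_reference(
    tx_hash, output_address='18SuMh4AFgTSQRvwFzdYGieHtgKDveHtc')
```

Here by_index and by_address hold the same two values shown in the examples above.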

Account/balance model

For networks using an account model (for example, Ethereum and EVM-compatible), you must reference a transaction hash and corresponding output address: {transaction_hash}:{output_address}.

As an example, let's say you want to register a simple Ether transfer with the transaction hash 0xe823c9b7895f9c47985c80e4611272f8194403e885c9cc603422cd609d738098 and output address 0x3d21a92285bf17cbdde5f77531b8b58ac400288a.

To register this transfer, the property should look like this:

"transferReference": "0xe823c9b7895f9c47985c80e4611272f8194403e885c9cc603422cd609d738098:0x3d21a92285bf17cbdde5f77531b8b58ac400288a"

If registering a transfer that sent funds to a smart contract, use the smart contract's contract address as the output address. If registering a transfer that received funds from a smart contract, use the end user's destination address as the output address.

In transactions where an output address is used multiple times (for example, some interactions with smart contracts), KYT will register the first output address where the transferred amount exceeds 0.

Solana

When registering transfers of SOL, the transferReference property only accepts the System Account address. The property should be formatted like the following:

{transaction_hash}:{system_account_address}

When registering transfers of SPL tokens, the transferReference property accepts either the System Account address (the wallet address) or the Token Account address (the ATA address). You can format the transferReference property in either of the following ways:

- {transaction_hash}:{system_account_address}
- {transaction_hash}:{token_account_address}

When registering historical transfers of SPL tokens with a System Account address, use the System Account address that owned the Token Account at the time of transfer, which may not be the current address.

Other models

Some assets require a unique transferReference value that does not fit into the above schemas.

Monero

For deposits, you should format transferReference like the following:

{transaction_hash}:{output_index}:{receiving_address}:{payment_ID}

For withdrawals, you should format transferReference like the following:

{transaction_hash}:{output_index}:{withdrawal_address}:{payment_ID}

Lightning Network

For the Lightning Network, you must supply a combination of the payment hash and recipient node key. You should format transferReference like the following:

{payment_hash}:{node_key}

To learn more, see Registering Lightning Network transactions and withdrawals.

Just a transaction hash

Some blockchain protocols require just a transaction hash and no output address or output index. We indicate these networks in the transferReference column of the network tables.

Tokens with nonunique symbols

In rare instances, two metatokens on a single network may share the same asset symbol. Since KYT uses the transferReference property to identify the transaction, nonunique asset symbols do not affect KYT's ability to monitor the transaction. In the extremely rare scenario that two metatokens share an asset symbol and send funds to the same outputAddress in the same transaction, KYT will register the first transfer seen. If this is the incorrect transfer, please contact Chainalysis Customer Support.

Polling the summary endpoints

Generally, KYT processes withdrawal attempts and transfers with blockchain confirmations in near real-time, thereby providing a quick risk assessment to make synchronous decisions. To verify KYT processed your request, you can call the summary endpoints (summary for withdrawal attempts or summary for transfers) and check whether updatedAt returns a non-null value. Once updatedAt returns a non-null value, you can attempt to retrieve alerts, exposure, or identifications.

Withdrawal attempts

KYT usually processes withdrawal attempts in less than a minute. You can begin polling the summary endpoint immediately after registering the withdrawal until updatedAt returns a non-null value, at which point you can begin checking for any generated alerts, exposure, or identifications.

While KYT often processes withdrawal attempts quickly, we still recommend setting a policy for how long to wait in case of latency or if updatedAt continues to return null for an extended period.
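The polling approach and wait policy described above can be sketched as follows. This is a minimal example, not an official client. The names poll_until_processed and fetch_summary, and the default timeout and interval values, are hypothetical. Here fetch_summary stands for whatever function in your code calls the summary endpoint and returns the parsed JSON response as a dictionary:

```python
import time


def poll_until_processed(fetch_summary, timeout_seconds=60.0, interval_seconds=2.0,
                         sleep=time.sleep, clock=time.monotonic):
    """Poll until updatedAt is non-null, or give up once the timeout elapses.

    Returns the summary dictionary once KYT has processed the request,
    or None if the timeout is reached first.
    """
    deadline = clock() + timeout_seconds
    while True:
        summary = fetch_summary()
        if summary.get('updatedAt') is not None:
            return summary
        if clock() >= deadline:
            # Timed out: the caller applies its own policy.
            return None
        sleep(interval_seconds)
```

A return value of None signals that your wait policy was exceeded, so you can hold the withdrawal, retry later, or escalate for manual review instead of waiting indefinitely. The sleep and clock parameters exist only so the loop can be exercised in tests without real delays.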

Transfers with confirmation

Assuming you have made the initial POST request after a block has been confirmed on the network, most transfers process in less than a minute (see the table below for more information). However, we do suggest building a buffer for instances of increased latency and setting a policy for how long to wait before crediting a user's account (for received transfers).

Many services require a few confirmations before crediting a user's funds, which usually takes several minutes. It is during this time that you can begin polling the GET /transfers/{externalId} endpoint.

Transfers without confirmation

If you register a transfer before it has any confirmations, processing times may increase as KYT first needs to validate the transaction exists on a blockchain, and updatedAt will remain null for longer.

If you register a transfer well before it is confirmed (for example, during high network congestion), KYT may take longer to process the transfer. We recommend registering the transfer in KYT either after it is confirmed on the blockchain or shortly before.

KYT processing times per network

KYT begins processing block data after a transaction achieves a predetermined number of confirmations. Often this threshold is a single confirmation, but some networks require more (for example, EOS). Additionally, the speed at which networks acquire confirmations varies from network to network (for example, Bitcoin’s block time is ~10 minutes while Ethereum’s block time is ~12-14 seconds).

See the table below for KYT’s confirmation threshold and approximate KYT processing time per network. Note that our team is continually working to improve the speed at which we ingest and process block data.

| Network | Confirmations required for KYT to ingest block data | Approximate KYT processing time after the threshold is met |
|---|---|---|
| Bitcoin | 1 confirmation | Approx. 30 seconds |
| Bitcoin Cash | 1 confirmation | Approx. 10 seconds |
| Bitcoin SV | 1 confirmation | Approx. 1 minute |
| Ethereum | 1 confirmation | Approx. 5 seconds |
| Ethereum Classic | 1 confirmation | Approx. 5 minutes |
| Litecoin | 1 confirmation | Approx. 10 seconds |
| EOS | 360 confirmations | Approx. 3 minutes |
| XRP | 1 confirmation | Approx. 5 seconds |
| Zcash | 1 confirmation | Approx. 5 seconds |
| Dogecoin | 1 confirmation | Approx. 5 seconds |

Caution: These times are estimates, and sometimes latency can fluctuate. After the threshold, KYT may take additional time to process depending on various factors, such as how long before confirmation you submitted a transfer. We suggest building a buffer in your internal system to account for potential irregularities.